LangChain vs Custom Workflows: Picking the Right Framework for AI Agents

Anyone building AI agents today faces the same frustrating fork in the road. On one side, there’s LangChain: popular, well-documented, and ready to go. On the other, there’s the option of building custom AI agent workflows from scratch: harder and slower, but incredibly flexible.

This isn’t just a technical decision. It impacts costs, speed to market, debugging headaches, and even long-term reliability. Too many teams rush in, pick one path, and only later realize they locked themselves into something that doesn’t scale.

So which one is right for your AI project, LangChain or custom workflows? Let’s break it down without hype or empty promises. Teams often choose LangChain when they need quick prototyping, simplicity, and a framework that excels at linear task sequences, especially when project complexity is low to moderate.

Quick Primer: What is LangChain? What Are Custom Workflows?

LangChain is an open-source framework that helps developers connect large language models (LLMs) to external tools, APIs, and knowledge bases. Think of it as a Lego kit for building AI apps. It abstracts away a lot of complexity, making it easier to get an AI agent up and running.

Custom workflows skip the pre-built framework. Instead, engineers build their own orchestration logic, APIs, and data pipelines. It’s like building a house from scratch instead of buying a ready-to-assemble kit. More control, but also more work.

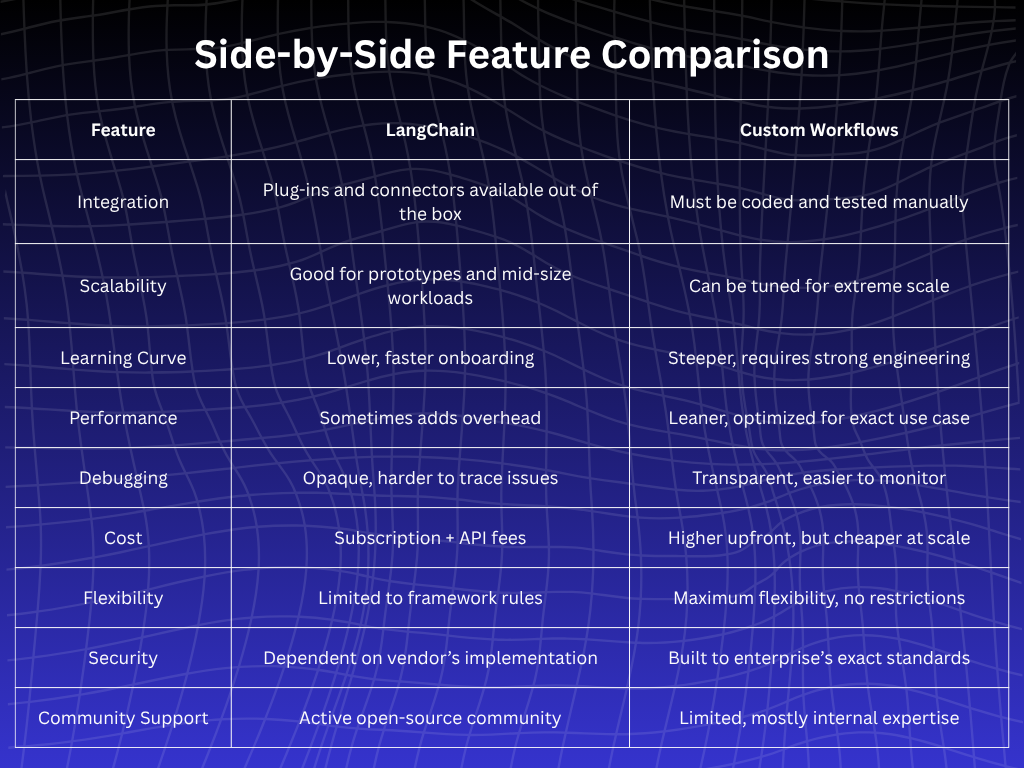

Side-by-Side Feature Comparison

Deep Dive Analysis

Here’s where things get interesting. Picking LangChain or building your own workflow sounds simple at first, but the deeper you go, the more surprises show up. Let’s unpack the real differences, the kind you only notice once the project is running in the wild.

1. Ease of Development

LangChain has become the go-to for teams that want to move quickly. The pre-built connectors to databases, APIs, and vector stores save weeks of work. A junior developer with decent Python skills can wire up a chatbot with document search in under a week. That’s why startups love it — speed to demo equals speed to funding.

But what people often overlook is what happens after the demo. Once you need custom logic, tight integration with legacy systems, or unusual orchestration flows, LangChain’s abstraction becomes a ceiling. Teams start fighting the framework instead of building with it.

Custom workflows demand more upfront thought: data pipelines, error handling, retry policies, monitoring. It feels heavy at first, but this “slowness” forces architectural clarity. The payoff shows up later: scaling, debugging, and compliance are far easier when the foundation is solid.
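To make “retry policies” concrete, here is a minimal sketch of the kind of helper a custom workflow ends up owning. The names `call_with_retry` and `TransientError` are hypothetical, not from any library, and the policy shown (capped exponential backoff with full jitter) is one common choice rather than the only one.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, rate limits, 5xx)."""


def call_with_retry(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller (and monitoring) see the failure
            # Delay ceiling doubles per attempt (0.5s, 1s, 2s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))  # full jitter spreads out retry storms
    raise AssertionError("unreachable")
```

Injecting `sleep` as a parameter is a small design choice that keeps the policy testable without real waiting, which is exactly the kind of control a framework may not expose.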

2. Performance & Scalability

Abstraction is a double-edged sword. LangChain handles a lot for you, but that “magic” adds overhead. Every request passes through wrappers, middleware, and orchestration logic that weren’t built specifically for your use case. At a small scale, nobody notices. At enterprise scale, milliseconds add up. Multiply by millions of requests, and you’re suddenly staring at ballooning compute bills.
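A quick back-of-the-envelope calculation shows how “milliseconds add up.” The inputs below (20 ms of per-request overhead, 2 million requests a day, $3 per compute-hour) are hypothetical illustration values, not measurements of LangChain, and the sketch assumes the overhead is billed compute time; if it is mostly idle I/O wait, the real cost impact is smaller.

```python
from typing import Tuple


def overhead_impact(overhead_ms: float, requests_per_day: int, cost_per_hour: float) -> Tuple[float, float]:
    """Return (extra hours per day, extra cost per year) for a fixed per-request overhead.

    Assumes the overhead is billed compute time, not idle waiting.
    """
    hours_per_day = overhead_ms * requests_per_day / 1000 / 3600
    return hours_per_day, hours_per_day * 365 * cost_per_hour


# Hypothetical inputs: 20 ms overhead, 2 million requests/day, $3 per compute-hour.
hours, yearly = overhead_impact(20, 2_000_000, 3.0)
print(f"{hours:.1f} extra hours/day, about ${yearly:,.0f}/year")
# prints: 11.1 extra hours/day, about $12,167/year
```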

Custom workflows cut the fat. They let engineers pick lightweight APIs, async processing, and caching strategies tuned to the workload. For example, one fintech scaled a fraud-detection AI agent to handle 2 million queries daily. When they moved away from LangChain to a custom orchestrator, they cut latency by 40% and saved nearly $200k annually in GPU costs.
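As an illustration of “async processing and caching strategies tuned to the workload,” here is a minimal sketch of an in-memory cache plus a concurrency cap placed in front of an async model call. `CachedLLMClient` and the `fake_llm` stub are hypothetical; a real system would wrap its provider’s SDK and likely use a shared cache such as Redis. Exact-match caching only helps when prompts repeat verbatim.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List, Tuple


class CachedLLMClient:
    """In-memory cache plus a concurrency cap in front of an async completion call.

    `complete_fn` stands in for a real provider SDK call (hypothetical here).
    Identical prompts that arrive concurrently may both reach the model;
    a production version would also de-duplicate in-flight requests.
    """

    def __init__(self, complete_fn: Callable[[str], Awaitable[str]], max_concurrency: int = 8):
        self._complete_fn = complete_fn
        self._cache: Dict[str, str] = {}
        self._semaphore = asyncio.Semaphore(max_concurrency)

    async def complete(self, prompt: str) -> str:
        if prompt in self._cache:  # cache hit: no model call, no cost
            return self._cache[prompt]
        async with self._semaphore:  # cap in-flight calls to respect rate limits
            result = await self._complete_fn(prompt)
        self._cache[prompt] = result
        return result


async def demo() -> Tuple[List[str], int]:
    calls: List[str] = []

    async def fake_llm(prompt: str) -> str:
        calls.append(prompt)
        await asyncio.sleep(0.01)  # simulated network latency
        return prompt.upper()

    # Create the client inside the running event loop.
    client = CachedLLMClient(fake_llm, max_concurrency=2)
    first = await asyncio.gather(*(client.complete(p) for p in ["a", "b", "c"]))
    repeat = await client.complete("a")  # served from the cache
    return list(first) + [repeat], len(calls)
```

Running `asyncio.run(demo())` issues three model calls for four requests, because the repeated prompt is answered from the cache.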

Something people don’t talk about enough: LangChain ties you to the Python (or JavaScript/TypeScript) ecosystem. Python is great for prototyping, but not always the best fit for high-performance workloads. Custom workflows let you use Go, Rust, or even C++ where raw speed matters.

3. Cost & Resource Use

Here’s where most teams trip up. LangChain feels inexpensive at first because you don’t need a large in-house team. But as usage grows, two hidden costs creep in:

- Framework overhead: extra compute from abstraction layers.

- Vendor/API lock-in: your system may lean heavily on one provider’s APIs (like OpenAI), making migration expensive later.

Custom workflows front-load the cost: you pay engineers to build the pipelines, monitoring systems, and connectors. But at scale, you save in two ways:

- Infrastructure efficiency: fewer wasted GPU cycles.

- Negotiating power: freedom to switch between LLM providers and model families (OpenAI, Anthropic, Mistral, Meta’s Llama) without being tied to LangChain’s design.
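That freedom to switch providers usually comes from a thin interface that the rest of the workflow depends on. The sketch below is one way to do it under stated assumptions: `ChatProvider`, `EchoProvider`, and `ProviderRegistry` are hypothetical names, and `EchoProvider` is a stand-in for a real adapter that would wrap a vendor’s SDK.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class ChatProvider(Protocol):
    """The only surface the rest of the workflow depends on (hypothetical interface)."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoProvider:
    """Stand-in for a real vendor adapter; a real one would call that vendor's SDK."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ProviderRegistry:
    """Maps task types to providers, so switching vendors is a config change, not a rewrite."""

    def __init__(self, routes: Dict[str, ChatProvider], default: ChatProvider):
        self._routes = routes
        self._default = default

    def for_task(self, task: str) -> ChatProvider:
        return self._routes.get(task, self._default)


# Placeholder vendor names; route summarization to a different provider than the default.
registry = ProviderRegistry(
    routes={"summarize": EchoProvider("vendor-b")},
    default=EchoProvider("vendor-a"),
)
print(registry.for_task("summarize").complete("hi"))  # [vendor-b] hi
```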

Few people mention this: enterprises often save millions over 3–5 years by eating the upfront custom engineering costs. That’s why banks, insurance firms, and healthcare giants rarely stick with LangChain for mission-critical systems.
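Whether the upfront spend pays off is a break-even question. The sketch below uses hypothetical inputs ($300k to build, $20k per month saved) rather than figures from any real project, and it assumes the monthly savings are already net of the custom system’s own maintenance costs.

```python
import math


def break_even_months(upfront_cost: float, net_monthly_savings: float) -> float:
    """Months until cumulative savings repay an upfront build cost (inf if they never do)."""
    if net_monthly_savings <= 0:
        return math.inf
    return upfront_cost / net_monthly_savings


def cumulative_net(upfront_cost: float, net_monthly_savings: float, months: int) -> float:
    """Total savings after `months`, minus the upfront investment."""
    return net_monthly_savings * months - upfront_cost


# Hypothetical inputs: $300k to build, $20k/month saved after the custom system's upkeep.
print(break_even_months(300_000, 20_000))   # 15.0
print(cumulative_net(300_000, 20_000, 36))  # 420000
print(cumulative_net(300_000, 20_000, 60))  # 900000
```

Under these assumptions the build pays for itself in 15 months; if the real savings are smaller or maintenance is heavier, the break-even point moves out quickly, which is why the 3–5 year horizon matters.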

4. Debugging & Reliability

One of the pain points with LangChain is the “black box” effect. Errors often bubble up without clear traces. Why did the agent fail? Was it the LLM call, the retriever, or a timeout in an external API? The logs don’t always tell you. Debugging turns into guesswork, which is stressful in production.

With custom workflows, every layer is owned and observable. Engineers can add structured logging, distributed tracing, and error alerts exactly where they want them. It’s more work to set up, but when things break (and they always do), the recovery time is dramatically shorter.
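Here is a minimal sketch of step-level structured logging that answers “was it the LLM call, the retriever, or a timeout?” Every step of one request shares a trace id, so the failing step stands out. The step and field names are hypothetical; in production you might emit OpenTelemetry spans instead of log lines, but the idea is the same.

```python
import json
import logging
import time
import uuid
from contextlib import contextmanager
from typing import Iterator

logger = logging.getLogger("agent")


@contextmanager
def traced_step(step: str, trace_id: str) -> Iterator[None]:
    """Log one JSON line per workflow step: trace id, step name, status, error, duration."""
    start = time.perf_counter()
    status, error = "ok", None
    try:
        yield
    except Exception as exc:
        status, error = "error", repr(exc)
        raise  # record the failure, then let it propagate unchanged
    finally:
        logger.info(json.dumps({
            "trace_id": trace_id,
            "step": step,
            "status": status,
            "error": error,
            "duration_ms": round((time.perf_counter() - start) * 1000, 2),
        }))


# Usage: all steps of one request share a trace id.
logging.basicConfig(level=logging.INFO, format="%(message)s")
request_trace = uuid.uuid4().hex
with traced_step("retrieve", request_trace):
    docs = ["doc-1", "doc-2"]  # stand-in for a retriever call
try:
    with traced_step("llm_call", request_trace):
        raise TimeoutError("model did not respond")  # simulated provider timeout
except TimeoutError:
    pass  # the llm_call log line records status "error" with the TimeoutError
```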

Here’s a detail people don’t always think about: auditability. In industries like healthcare and finance, you need a clear record of what the AI did, when, and why. LangChain doesn’t give you that out of the box. Custom workflows let you bake in audit trails that satisfy regulators.
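One way to bake in an audit trail is an append-only log where each entry records what the agent did, when, and why, and is chained to the previous entry by a hash, so any later edit is detectable. This is a sketch of that one technique, not a compliance guarantee: `AuditLog` and its field names are hypothetical, and a real deployment would persist entries to write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only, hash-chained audit trail: editing any past entry breaks verification."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def record(self, actor: str, action: str, inputs: Dict[str, Any], output: Any, reason: str) -> Dict[str, Any]:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),  # when
            "actor": actor,    # which agent or step acted
            "action": action,  # what it did
            "inputs": inputs,
            "output": output,
            "reason": reason,  # why, e.g. the rule or threshold applied
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check each entry points at its predecessor."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```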

5. Ecosystem & Support

LangChain benefits from a buzzing open-source community. There are ready-made templates, active Slack channels, and GitHub repos where you can grab example code. For early-stage teams, that’s gold. You don’t have to reinvent the wheel; you just adapt what’s already out there.

But there’s a flip side: LangChain moves fast. APIs change, features get deprecated, and breaking changes can appear between versions. A script that worked last month might fail today. That unpredictability frustrates enterprises that demand stability.

Custom workflows don’t have community safety nets. It’s your engineers building and maintaining everything. But the upside is long-term stability. Some enterprises even build internal “mini frameworks” so future projects reuse proven workflows, creating their own ecosystem without relying on external churn.

Another hidden angle: talent availability. Developers familiar with LangChain are easier to hire today because of its popularity. Engineers who can build robust custom orchestrators? Much rarer, and often more expensive. That talent gap itself becomes a cost consideration.

Multi-Agent Systems: Orchestrating Complex AI Workflows

As AI applications grow in sophistication, the need to coordinate multiple agents within a single system has become increasingly important.

Multi-agent systems allow developers to design complex workflows where several agents—each with specialized capabilities—work together to solve problems that would overwhelm a single agent. This approach is especially valuable for tasks that require collaboration, negotiation, or the integration of diverse external tools and data sources.

In practice, multi-agent systems unlock the ability to build truly dynamic AI workflows. Imagine a scenario where one agent handles user queries, another manages data retrieval from a vector store, and a third orchestrates API calls to external tools.

By distributing responsibilities, these systems can tackle complex workflows that involve conditional logic, complex branching, and even cyclical graphs—far beyond what linear workflows can achieve.
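To show what conditional branching and a cyclical graph look like in practice, here is a hand-rolled sketch in plain Python. It is not LangGraph’s API; it only illustrates the same idea: named agent nodes, a router that picks a branch, a retrieval node that can loop back on itself, and a step budget that stops runaway cycles. All agents are stubs and every name is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class State:
    """Shared state passed between agents (fields are illustrative)."""
    query: str
    docs: List[str] = field(default_factory=list)
    answer: Optional[str] = None
    attempts: int = 0
    trail: List[str] = field(default_factory=list)


# Each agent reads and updates the state, then returns the name of the next node.
def router(state: State) -> str:
    state.trail.append("router")
    return "retriever" if "policy" in state.query.lower() else "tool_caller"


def retriever(state: State) -> str:
    state.trail.append("retriever")
    state.attempts += 1
    # Stand-in for a vector-store lookup: pretend the first attempt finds nothing.
    state.docs = [] if state.attempts == 1 else ["Refunds are allowed within 30 days."]
    return "answerer" if state.docs else "retriever"  # cycle back and retry


def tool_caller(state: State) -> str:
    state.trail.append("tool_caller")
    state.answer = f"(tool result for: {state.query})"  # stand-in for an external API call
    return "END"


def answerer(state: State) -> str:
    state.trail.append("answerer")
    state.answer = state.docs[0]
    return "END"


NODES: Dict[str, Callable[[State], str]] = {
    "router": router, "retriever": retriever, "tool_caller": tool_caller, "answerer": answerer,
}


def run(state: State, start: str = "router", max_steps: int = 10) -> State:
    """Follow node transitions until END, failing loudly if the step budget runs out."""
    node, steps = start, 0
    while node != "END":
        if steps >= max_steps:  # guard against infinite cycles
            raise RuntimeError(f"step budget exhausted at node {node!r}")
        node = NODES[node](state)
        steps += 1
    return state
```

A policy question takes the path router → retriever → retriever → answerer, while any other request goes straight to the tool-calling agent.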

LLM frameworks like LangChain and LangGraph have made orchestrating multi-agent systems more accessible. LangChain, for example, provides building blocks for connecting agents to external tools, managing conversation history, and maintaining context across multiple steps.

LangGraph takes this further by letting developers define non-linear workflows, manage complex state transitions, and model agent interactions as explicit state graphs. These frameworks act as an orchestration layer, allowing multiple agents to communicate, share data, and coordinate tool usage without developers having to reinvent the wheel.

The key difference between LangChain and LangGraph lies in the level of control and flexibility they offer for multi-agent workflows. LangChain makes it easy to start simple and scale up, but it can become unwieldy when managing complex multi-agent systems with fine-grained control requirements.

LangGraph, on the other hand, is well suited for developers who need granular control over agent workflows, state management, and the integration of multiple external tools.

Ultimately, the rise of multi-agent systems is reshaping how AI solutions are created. Whether you’re building a customer support platform with multiple specialized agents or orchestrating complex tasks across a suite of external APIs, choosing the right framework for your multi-agent workflows is critical.

LangChain and LangGraph both offer powerful solutions for orchestrating these systems, helping developers connect agents, manage complexity, and deliver robust AI applications at scale.

Use Cases & Real-World Scenarios

Best for LangChain:

- Startups that need prototypes quickly

- Teams without deep ML engineering backgrounds

- Use cases like customer support bots, knowledge retrieval, or document Q&A

Best for Custom Workflows:

- Enterprises in compliance-heavy industries such as finance and healthcare

- Mission-critical AI agents that can’t afford downtime

- High-volume automation where every millisecond and dollar matters

Decision Framework

Think of it like a simple flowchart:

- Need to get to market fast? → LangChain.

- Need deep optimization and control at scale? → Custom workflows.

- Unsure? Start with LangChain, then migrate critical paths to custom workflows later.

Hybrid models are becoming the norm. Many teams use LangChain for orchestration but rely on custom-built components for security-sensitive or performance-heavy tasks.
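A hybrid setup can be as simple as a router that sends flagged critical paths to custom components and everything else to the framework-built pipeline, so migrating a path is a one-line change (a variant of the strangler-fig pattern). In this sketch both handlers are hypothetical stand-ins: no LangChain calls are made, and the digit masking is a deliberately naive placeholder for a real security-sensitive component.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def framework_handler(request: str) -> str:
    """Stand-in for a framework-built chain, such as a LangChain pipeline (hypothetical)."""
    return f"framework:{request}"


def custom_redaction_handler(request: str) -> str:
    """Stand-in for a custom, security-sensitive component (naive digit masking)."""
    masked = "".join("*" if ch.isdigit() else ch for ch in request)
    return f"custom:{masked}"


class HybridRouter:
    """Sends migrated critical paths to custom handlers; everything else to the framework."""

    def __init__(self, default: Handler, overrides: Dict[str, Handler]):
        self._default = default
        self._overrides = overrides

    def handle(self, route: str, request: str) -> str:
        return self._overrides.get(route, self._default)(request)


router = HybridRouter(
    default=framework_handler,
    overrides={"payments": custom_redaction_handler},  # the path migrated to custom code
)
print(router.handle("faq", "reset password"))      # framework:reset password
print(router.handle("payments", "card 4111 1111"))  # custom:card **** ****
```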

Future Outlook

1. LangChain’s trajectory: It’s moving fast toward enterprise-readiness, adding better debugging, observability, and integrations. Expect it to become the “default choice” for fast builds.

2. Custom workflows’ strength: They’ll remain the go-to for enterprises that value control over convenience. Especially as regulations around AI tighten, expect custom workflows to dominate in compliance-heavy sectors.

3. The hybrid future: Most companies will mix both. LangChain will handle the orchestration layer, while custom workflows carry the critical workloads under the hood.

Conclusion

LangChain vs custom workflows, which one should businesses choose? The honest answer: it depends on speed vs control. LangChain is perfect for quick wins. Custom workflows win when the stakes are high.

At Ampcome, we help businesses strike that balance by building hybrid AI systems that don’t just work today but also scale tomorrow.

Thinking about AI agents for your business? Talk to Ampcome experts and get the right framework strategy.

FAQs

1. Is LangChain good for enterprise AI agents?

Yes, but only if speed and prototyping are the priority. For high-compliance or mission-critical use cases, enterprises often move to custom workflows.

2. What are the downsides of LangChain?

Abstraction layers can slow performance. Debugging is harder, and long-term costs can increase with heavy API usage.

3. When should you build custom AI workflows?

When control, compliance, and cost efficiency at scale matter more than speed of deployment.

4. Which is cheaper: LangChain or custom workflows?

LangChain is cheaper short-term. Custom workflows become more cost-effective at enterprise scale.

5. Can you combine LangChain with custom workflows?

Absolutely. Many teams start with LangChain for orchestration and plug in custom workflows for critical paths.
