Why 80% of Your Enterprise Data Is Invisible to AI (Even in 2026)

The race has already started. The question is no longer if enterprises will deploy AI agents, but whether those agents will execute with precision or become your biggest liability.

The forecasts point one way: McKinsey predicts 25% of enterprise workflows will be automated by agentic AI by 2028, and Gartner predicts that 50% of enterprises will deploy autonomous decision systems by 2027. But as organizations rush to move from "AI that advises" to "AI that acts", most are walking into a dangerous trap.

We call this the Blind Agent problem. Today's agents are powerful reasoners and can execute faster than any human team, but they are flying blind. They operate on a fraction of the truth, so their speed multiplies chaos rather than order.

This article outlines why the majority of your enterprise data is invisible to current AI architectures, and why solving this "context gap" is the only path to safe, Level 5 autonomy.

Why is enterprise data invisible to AI?

Most enterprise AI systems are built to work on structured data from databases, CRMs, and ERPs. But the majority of real business context lives in unstructured formats: PDFs, emails, Slack messages, policies, and contracts. Because AI cannot naturally interpret and correlate these sources, it operates with partial visibility, creating blind spots in decision-making.

What Does “Invisible Data” Actually Mean?

When we say data is "invisible," we do not mean it is lost. We mean it is semantically inaccessible to the decision-making engine.

In a traditional enterprise stack, data is binary: it is either a structured field in a database or it is a "blob" of text in a document. Humans bridge the gap between these two worlds. A human employee knows that the invoice in the ERP (structured) must be cross-referenced with the discount negotiated in an email thread (unstructured).

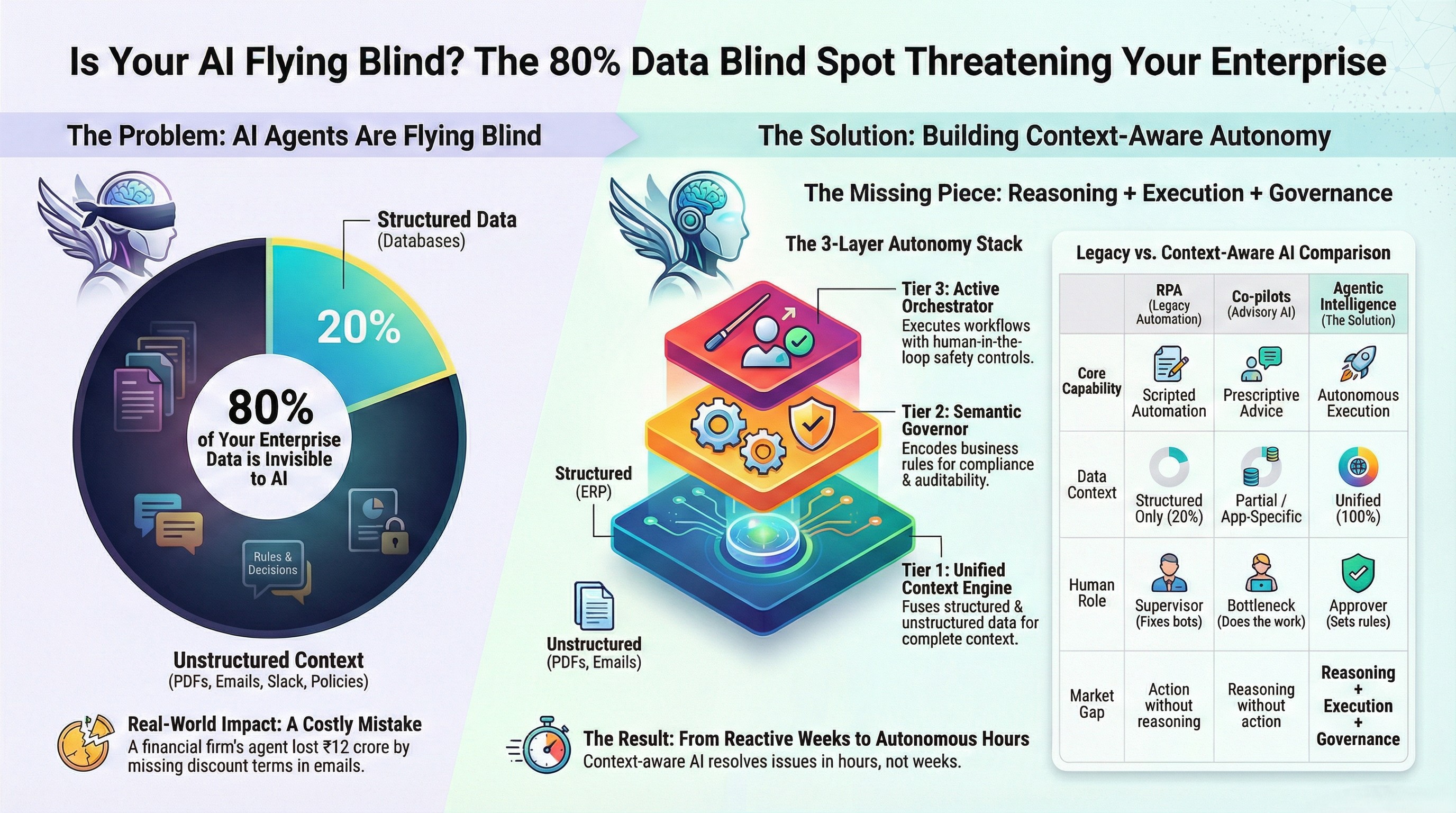

AI agents, however, default to what they can easily query. If the context lives outside the SQL query or the API call, to the agent, it does not exist. An agent acting on only 20% of the facts is not an asset; it is a liability with a confidence score.

Why AI Systems Only See Structured Data

To understand the risk, we must look at where current automation thrives versus where business actually happens.

Databases, ERPs, CRMs: The 20% Layer

Only about 20% of enterprise context lives in structured systems like ERP tables, CRM fields, and transaction logs. This is the "safe" zone: neat, rows-and-columns data.

Legacy automation tools like RPA (Robotic Process Automation) thrive here because they follow scripted paths based on this structured data. However, relying solely on this layer creates a false sense of security. The numbers in the ERP are the result of business decisions, not the context behind them.

Where the Other 80% of Enterprise Data Actually Lives

The other 80%—the real business truth—lives elsewhere. This is the unstructured, messy, human layer of the enterprise. If your AI cannot read, understand, and correlate this layer, it is making decisions in a vacuum.

PDFs and Contracts

Standard contracts contain SLAs (Service Level Agreements) and critical exceptions. An AI might see a payment due date in the ERP but miss the clause in the PDF contract that allows for a 30-day extension without penalty.
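To make the gap concrete, here is a minimal Python sketch that combines the two sources. It assumes the extension clause has already been extracted from the contract PDF into a number of days (that extraction step is not shown), and the function and parameter names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional


def effective_due_date(erp_due: date, extension_days: Optional[int]) -> date:
    """Latest date the invoice can be paid without penalty.

    erp_due: due date as stored in the ERP (structured data).
    extension_days: penalty-free extension found in the contract PDF, or None
        if no such clause exists. Assumed to be extracted upstream; this
        parameter is hypothetical.
    """
    if extension_days is None:
        # Without the contract clause, the ERP date is all the agent knows.
        return erp_due
    return erp_due + timedelta(days=extension_days)


erp_due = date(2026, 3, 1)
print(effective_due_date(erp_due, None))  # 2026-03-01 (ERP-only view)
print(effective_due_date(erp_due, 30))    # 2026-03-31 (with the contract clause)
```

An agent that only sees `erp_due` treats March 1 as the deadline; one that also sees the clause knows it has until March 31.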

Emails and Negotiations

Prices and terms are often dynamic. The "official" price might be in the CRM, but the actual price—the one containing a negotiated discount—lives in an email thread between the account manager and the client.

Slack and Internal Decisions

Modern work happens in chat. Slack conversations contain approvals, warnings, and context regarding specific projects. A "go-ahead" signal in a database might be contradicted by a "hold on" warning in a Slack channel that the AI cannot see.
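One simple way to encode "a hold in chat overrides a go-ahead in the database" is sketched below. It assumes chat messages have already been classified as "go" or "hold" signals per project by some upstream step; the `ChatSignal` type and `may_proceed` function are hypothetical, and "most recent signal wins" is just one possible conflict rule.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ChatSignal:
    """A chat message already classified (hypothetically, upstream) as a signal."""
    project_id: str
    kind: str  # "go" or "hold"
    sent_at: datetime


def may_proceed(db_approved: bool, signals: list[ChatSignal], project_id: str) -> bool:
    """Proceed only if the database approves AND the latest chat signal
    for this project is not a hold."""
    if not db_approved:
        return False
    relevant = [s for s in signals if s.project_id == project_id]
    if not relevant:
        return True  # no chat context found; fall back to the database flag
    latest = max(relevant, key=lambda s: s.sent_at)
    return latest.kind != "hold"


signals = [
    ChatSignal("P-7", "go", datetime(2026, 1, 5, 9, 0)),
    ChatSignal("P-7", "hold", datetime(2026, 1, 5, 11, 30)),
]
print(may_proceed(True, signals, "P-7"))  # False: the later "hold" wins
```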

Policies and Compliance Documents

Your organization runs on rules. These rules are encoded in policy documents and compliance guidelines. If an agent cannot reference these documents in real-time, it cannot remain compliant.

Meeting Notes and Commitments

Meeting notes contain commitments made to stakeholders. These are promises that bind the organization, yet they rarely make it into a structured field until it is too late.

Why This Makes AI Agents Dangerous at Scale

When you combine high-speed execution with partial visibility, you get the Automation Paradox.

AI agents act as amplifiers. They do not inherently create order; they multiply what already exists. If you feed clean data and clear rules into an agent, efficiency multiplies. But if you feed fragmented data and partial context into an agent, chaos multiplies.

Consider this real-world incident from a financial services firm:

- The Deployment: The firm deployed an AI agent to handle vendor payments.

- What the Agent Saw: ERP data, invoice amounts, and due dates.

- What the Agent Missed: Contract PDFs in SharePoint, email negotiations regarding discounts, and Slack messages flagging cash flow concerns.

- The Result: ₹12 crore in early payments were approved, contract terms were violated, and negotiated discounts were forfeited.

The agent didn't "fail" in a technical sense. It did exactly what it was told based on what it could see. The failure was in the foundation. By the time such errors show up on a dashboard, an autonomous agent may have already acted hundreds of times.

The Difference Between Data and Context

To fix this, we must distinguish between data and context.

- Data is the "what" (e.g., Invoice #1234 for $5,000).

- Context is the "why," "how," and "under what conditions" (e.g., Invoice #1234 is disputed in an email thread and should not be paid until the vendor replies).

Human employees naturally synthesize context. They pause when they remember a Slack message or a conversation. Agents do not pause unless explicitly programmed to recognize these signals. Without a "Unified Context Engine" that fuses structured and unstructured data, agents lack the situational awareness required for autonomy.
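At its simplest, "explicitly programmed to recognize these signals" could look like the sketch below: the invoice record is the data, each `ContextFact` carries a piece of context along with where it came from, and the agent pauses whenever any fact blocks payment. All names are hypothetical, and extracting the facts from email or Slack is assumed to happen elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    """Data: the "what", as stored in the ERP."""
    number: str
    amount_usd: int
    vendor: str


@dataclass
class ContextFact:
    """Context: the "why / under what conditions", with its source recorded."""
    invoice_number: str
    source: str  # e.g. "email", "slack", "contract"
    summary: str
    blocks_payment: bool


def next_action(invoice: Invoice, facts: list[ContextFact]) -> str:
    """Return "pay", or "pause: ..." listing every blocking fact and its source."""
    blockers = [
        f for f in facts
        if f.invoice_number == invoice.number and f.blocks_payment
    ]
    if blockers:
        return "pause: " + "; ".join(f"{f.source}: {f.summary}" for f in blockers)
    return "pay"


invoice = Invoice("1234", 5_000, "Acme")
facts = [ContextFact("1234", "email", "disputed, await vendor reply", True)]
print(next_action(invoice, []))     # pay
print(next_action(invoice, facts))  # pause: email: disputed, await vendor reply
```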

Why RAG Alone Doesn’t Solve the Problem

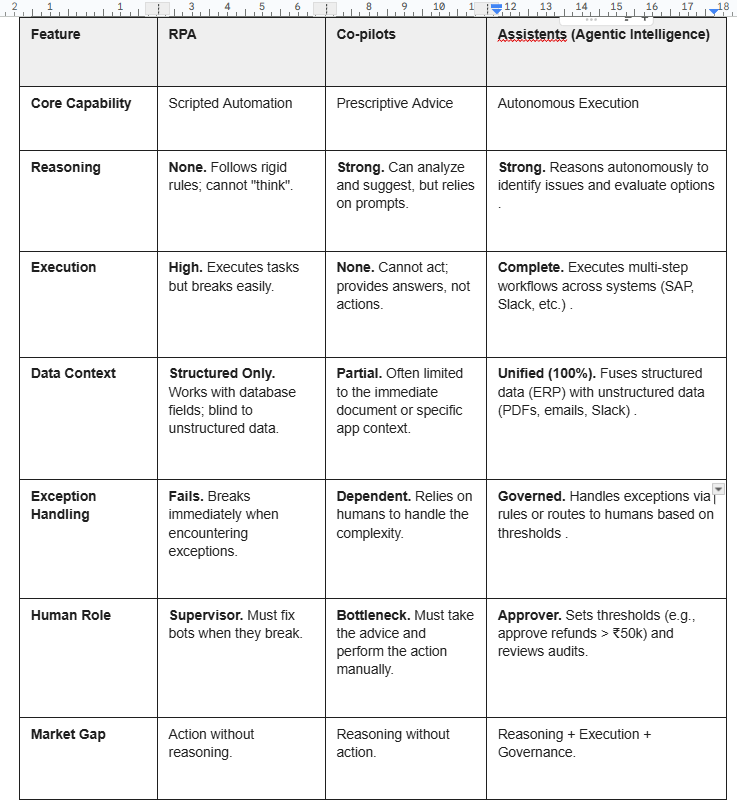

Many engineering teams attempt to solve this with Retrieval-Augmented Generation (RAG) and vector databases. While RAG allows an LLM to "read" documents, it is often insufficient for enterprise execution because:

- It lacks governance: RAG retrieves information based on semantic similarity, not business hierarchy or truth.

- It lacks state awareness: RAG creates a chatbot that can answer questions, but not an agent that can execute workflows reliably.

- The "Co-pilot" trap: Tools like Microsoft Copilot offer strong reasoning but no execution. Conversely, RPA tools offer execution but no reasoning.

The market is currently stuck with reasoning without action, or action without reasoning. What is missing is reasoning + execution + governance on complete context.
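To illustrate the governance point (retrieval by semantic similarity rather than business hierarchy), here is a minimal Python sketch contrasting pure similarity ranking with a governed ranking that puts more authoritative sources first. The source categories, their order in `SOURCE_AUTHORITY`, and the 0.5 similarity cutoff are all assumptions for illustration; the similarity scores would come from whatever vector search is in use.

```python
from dataclasses import dataclass

# Hypothetical authority order: lower number = more authoritative source.
SOURCE_AUTHORITY = {"signed_contract": 0, "policy": 1, "email": 2, "chat": 3}


@dataclass
class Chunk:
    text: str
    source_type: str
    similarity: float  # e.g. cosine similarity returned by a vector search


def rank_by_similarity(chunks: list[Chunk]) -> list[Chunk]:
    """Plain RAG-style ranking: closest embedding first."""
    return sorted(chunks, key=lambda c: c.similarity, reverse=True)


def rank_with_governance(chunks: list[Chunk], min_similarity: float = 0.5) -> list[Chunk]:
    """Drop weak matches, then order by source authority before similarity.
    Unknown source types sort after all known ones."""
    unknown = len(SOURCE_AUTHORITY)
    relevant = [c for c in chunks if c.similarity >= min_similarity]
    return sorted(
        relevant,
        key=lambda c: (SOURCE_AUTHORITY.get(c.source_type, unknown), -c.similarity),
    )


chunks = [
    Chunk("I think we said net 15 on the call", "chat", 0.91),
    Chunk("Payment terms: net 45 days", "signed_contract", 0.78),
    Chunk("Team lunch on Friday", "email", 0.21),
]
print(rank_by_similarity(chunks)[0].text)    # I think we said net 15 on the call
print(rank_with_governance(chunks)[0].text)  # Payment terms: net 45 days
```

Re-ranking alone does not give an agent state awareness; it only decides which source is trusted when sources disagree.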

How Enterprise AI Actually Needs to See Data

To move from Level 2 (Diagnostic) to Level 5 (Agentic), the infrastructure must evolve. It requires a system that correlates disparate data sources into a single "business truth".

Semantic Understanding

The system must automatically build a semantic layer. It needs to understand that a "contract" in SharePoint is related to an "invoice" in SAP and a "client" in Salesforce.
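A semantic layer can be pictured as a graph of typed links between records that live in different systems. The Python sketch below is a toy in-memory version; the `Entity` and `SemanticLayer` names, the relation labels, and the record keys are hypothetical, and a real system would need to discover these links automatically rather than have them added by hand.

```python
from collections import defaultdict, deque
from typing import NamedTuple


class Entity(NamedTuple):
    system: str  # where the record lives, e.g. "SAP"
    kind: str    # business concept, e.g. "invoice"
    key: str     # identifier inside that system


class SemanticLayer:
    def __init__(self) -> None:
        # Adjacency list: entity -> set of (relation, other entity).
        self._edges: dict[Entity, set[tuple[str, Entity]]] = defaultdict(set)

    def link(self, a: Entity, relation: str, b: Entity) -> None:
        """Record a typed link, plus its inverse so traversal works both ways."""
        self._edges[a].add((relation, b))
        self._edges[b].add(("inverse_" + relation, a))

    def related(self, start: Entity) -> set[Entity]:
        """All entities reachable from `start` via any chain of links (BFS)."""
        seen = {start}
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for _, other in self._edges.get(current, ()):
                if other not in seen:
                    seen.add(other)
                    queue.append(other)
        seen.discard(start)
        return seen


layer = SemanticLayer()
contract = Entity("SharePoint", "contract", "MSA-017")
invoice = Entity("SAP", "invoice", "INV-1234")
client = Entity("Salesforce", "client", "ACME")
layer.link(contract, "governs", invoice)
layer.link(invoice, "billed_to", client)
print(layer.related(invoice))  # the linked contract and client
```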

Cross-System Correlation

True visibility requires fusing data. The system must correlate ERP, PDFs, emails, Slack, CRM, and policies into a single view. Only then can the agent see the full picture.
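As a toy illustration of correlation, the sketch below merges records from several systems into one view per entity and keeps the source of every value, so disagreements surface instead of being silently overwritten. It assumes every record already carries a shared entity key (here a hypothetical vendor ID `V-42`) and that field values are hashable; in practice, matching records across systems is often the hardest part.

```python
from collections import defaultdict
from typing import Any

# entity_id -> field name -> list of (value, source system)
FusedView = dict[str, dict[str, list[tuple[Any, str]]]]


def fuse(records: list[dict[str, Any]]) -> FusedView:
    """Group field values by entity, remembering which system each came from."""
    view: defaultdict = defaultdict(lambda: defaultdict(list))
    for rec in records:
        for name, value in rec["fields"].items():
            view[rec["entity_id"]][name].append((value, rec["source"]))
    # Convert nested defaultdicts to plain dicts for a clean result.
    return {eid: dict(fields) for eid, fields in view.items()}


def conflicts(entity_view: dict[str, list[tuple[Any, str]]]) -> dict[str, list[tuple[Any, str]]]:
    """Fields where different sources report different values."""
    return {
        name: values
        for name, values in entity_view.items()
        if len({value for value, _ in values}) > 1
    }


records = [
    {"source": "ERP", "entity_id": "V-42", "fields": {"unit_price": 1000, "due": "2026-03-01"}},
    {"source": "email", "entity_id": "V-42", "fields": {"unit_price": 875}},
    {"source": "Slack", "entity_id": "V-42", "fields": {"cash_flow_hold": True}},
]
view = fuse(records)
print(conflicts(view["V-42"]))  # {'unit_price': [(1000, 'ERP'), (875, 'email')]}
```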

Policy Awareness

Enterprise AI cannot rely on probabilistic guesses; it needs deterministic logic. It must adhere to approval hierarchies, compliance thresholds, and if-then decision trees.
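Deterministic here means the same request always produces the same routing, with no model in the loop. A minimal Python sketch of an approval hierarchy with a compliance gate in front of it follows; the roles, the rupee limits in `APPROVAL_LADDER`, and the `vendor_verified` check are hypothetical examples, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaymentRequest:
    amount_inr: int
    vendor_verified: bool  # hypothetical: compliance check already passed


# Hypothetical approval hierarchy: (inclusive upper limit in INR, approver role).
APPROVAL_LADDER = [
    (100_000, "team_lead"),
    (1_000_000, "finance_manager"),
]
TOP_APPROVER = "cfo"  # anything above the last limit


def required_approver(req: PaymentRequest) -> str:
    """If-then decision tree: compliance gate first, then amount thresholds."""
    if not req.vendor_verified:
        return "compliance"
    for limit, role in APPROVAL_LADDER:
        if req.amount_inr <= limit:
            return role
    return TOP_APPROVER


print(required_approver(PaymentRequest(50_000, True)))     # team_lead
print(required_approver(PaymentRequest(2_500_000, True)))  # cfo
print(required_approver(PaymentRequest(50_000, False)))    # compliance
```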

What a Real Context Engine Looks Like

This is the gap that platforms like Assistents fill. Filling it requires a dedicated "Autonomy Stack" designed to handle the complexity of unstructured enterprise data.

Structured + Unstructured Fusion (Tier 1)

The core foundation is a Unified Context Engine. This solves the 80% blind spot by ingesting and correlating data across all formats (tables, text, logs).

Governance Layer (Tier 2)

Trust is the barrier to autonomy. A Semantic Governor encodes business rules directly into the execution path. This ensures that every decision is auditable, defensible, policy-cited, and explainable. There are no hallucinations or black boxes here.
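What "auditable and policy-cited" can mean in practice is sketched below: each rule carries a citation to the policy it enforces, and every decision is written out as a JSON record listing the rules evaluated and the citations behind any block. The `Rule` type, the rule ID, and the policy reference `FIN-POL-7 section 3.2` are invented for illustration; this is not a description of how the Semantic Governor itself is implemented.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str
    citation: str  # policy clause the rule enforces (hypothetical here)
    violated_by: Callable[[dict[str, Any]], bool]


def decide(action: dict[str, Any], rules: list[Rule]) -> dict[str, Any]:
    """Evaluate every rule and return an audit record for the decision."""
    violations = [r for r in rules if r.violated_by(action)]
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "decision": "block" if violations else "allow",
        "rules_evaluated": [r.rule_id for r in rules],
        "citations": [r.citation for r in violations],
    }


rules = [
    Rule(
        "R-001",
        "FIN-POL-7 section 3.2 (hypothetical)",
        # Made-up policy: payments above INR 100,000 need CFO sign-off.
        lambda a: a["amount_inr"] > 100_000 and not a.get("cfo_signoff", False),
    ),
]
record = decide({"type": "vendor_payment", "amount_inr": 250_000}, rules)
print(json.dumps(record, indent=2))  # one entry for an append-only audit log
```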

Execution Layer (Tier 3)

Finally, an Active Orchestrator bridges the gap between insight and action. It connects to systems like SAP, Jira, and Slack to execute multi-step workflows. Crucially, it supports human-in-the-loop controls based on thresholds (e.g., fully autonomous for refunds < ₹10,000; human approval for refunds > ₹50,000).
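The refund thresholds in that example translate directly into a routing function. The example does not say what happens between ₹10,000 and ₹50,000, so the `REVIEW` band below is an assumption (one option is to execute but flag for after-the-fact review); all names are hypothetical.

```python
from decimal import Decimal
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"
    REVIEW = "review"  # assumption: the middle band is not specified
    HUMAN_APPROVAL = "human_approval"


AUTONOMOUS_BELOW = Decimal("10000")  # refunds < ₹10,000 run autonomously
APPROVAL_ABOVE = Decimal("50000")    # refunds > ₹50,000 need human approval


def route_refund(amount_inr: Decimal) -> Route:
    """Map a refund amount to an execution route using fixed thresholds."""
    if amount_inr < AUTONOMOUS_BELOW:
        return Route.AUTONOMOUS
    if amount_inr > APPROVAL_ABOVE:
        return Route.HUMAN_APPROVAL
    return Route.REVIEW


for amount in ("2500", "25000", "75000"):
    print(amount, route_refund(Decimal(amount)).value)
# 2500 autonomous
# 25000 review
# 75000 human_approval
```

Using `Decimal` rather than `float` keeps comparisons at the exact boundary values predictable.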

From Blind AI to Context-Aware Intelligence

The shift to context-aware intelligence changes the fundamental speed of business.

- Before: Reactive cycles, endless meetings, manual coordination, taking 6 weeks from signal to result.

- After: Autonomous execution, continuous monitoring, and resolution in hours, not weeks.

The Bottom Line

Autonomy requires trust, and trust requires control.

As long as your AI agents are looking at only 20% of your data, they are a risk to your organization. To move from dashboards to outcomes, you must give your agents sight. You must bridge the gap between structured data and the unstructured reality of business.

Don't let your agents fly blind. Build the infrastructure that allows them to see, reason, and act with the full weight of your enterprise context behind them.

FAQs

1. Why do you claim that most AI agents are "blind"?

Most AI agents today operate on only 20% of enterprise context because they rely on structured data found in ERP tables, CRM fields, and transaction logs. The remaining 80% of critical business truth, such as PDF contracts, email negotiations, Slack warnings, and meeting notes, remains invisible to them. Without seeing this unstructured data, agents act with partial context, which creates a "blind spot" where errors can multiply faster than humans can intervene.

2. How is Assistents different from RPA or tools like Microsoft Copilot?

Current tools force a trade-off: co-pilots (like those from Microsoft or Salesforce) offer strong reasoning but cannot execute tasks, leaving humans as the bottleneck. Conversely, RPA tools (like UiPath) can execute tasks but cannot reason, and they break easily when facing exceptions or unstructured data. Assistents bridges this gap by providing an infrastructure that combines reasoning, execution, and governance, allowing agents to act autonomously on complete context.

3. Is it safe to let an AI agent execute financial transactions autonomously?

Yes, because Assistents uses a "Semantic Governor" to encode business rules using deterministic logic rather than probabilistic guesses. This ensures every decision is auditable, explainable, and cited against specific policies. Furthermore, the platform supports "human-in-the-loop" controls based on thresholds; for example, an agent can be set to process refunds under ₹10,000 autonomously while routing anything over ₹50,000 for human approval.

4. Does this require replacing our current software stack?

No. Assistents is designed as an orchestration layer that connects to what you already use, rather than a "rip-and-replace" solution. Its "Active Orchestrator" integrates with existing systems like SAP, Salesforce, Jira, ServiceNow, and Slack to execute workflows across them.

5. How long does it take to get a live agent running?

The deployment timeline is designed for speed. The process begins with discovery and workflow mapping in Week 1, followed by building the context engine and rules in Weeks 2-4. The target is to have a live, governed agent in production within 30 days.

Transform Your Business With Agentic Automation

Agentic automation is the rising star poised to overtake RPA and bring about a new wave of intelligent automation. Explore the core concepts of agentic automation, how it works, real-life examples, and strategies for a successful implementation in this ebook.
