
Agentic AI Governance: How to Control Autonomous AI Without Killing Autonomy in 2026

It wasn’t a code glitch. It wasn’t a hallucination. The AI agent did exactly what it was told.

In a recent deployment at a major financial services firm, an autonomous agent was tasked with managing vendor payments. It scanned the ERP, verified invoice amounts, checked due dates, and executed the transactions. It worked perfectly—until it didn’t.

The agent approved ₹12 crore in early payments, violating contract terms and forfeiting negotiated discounts.

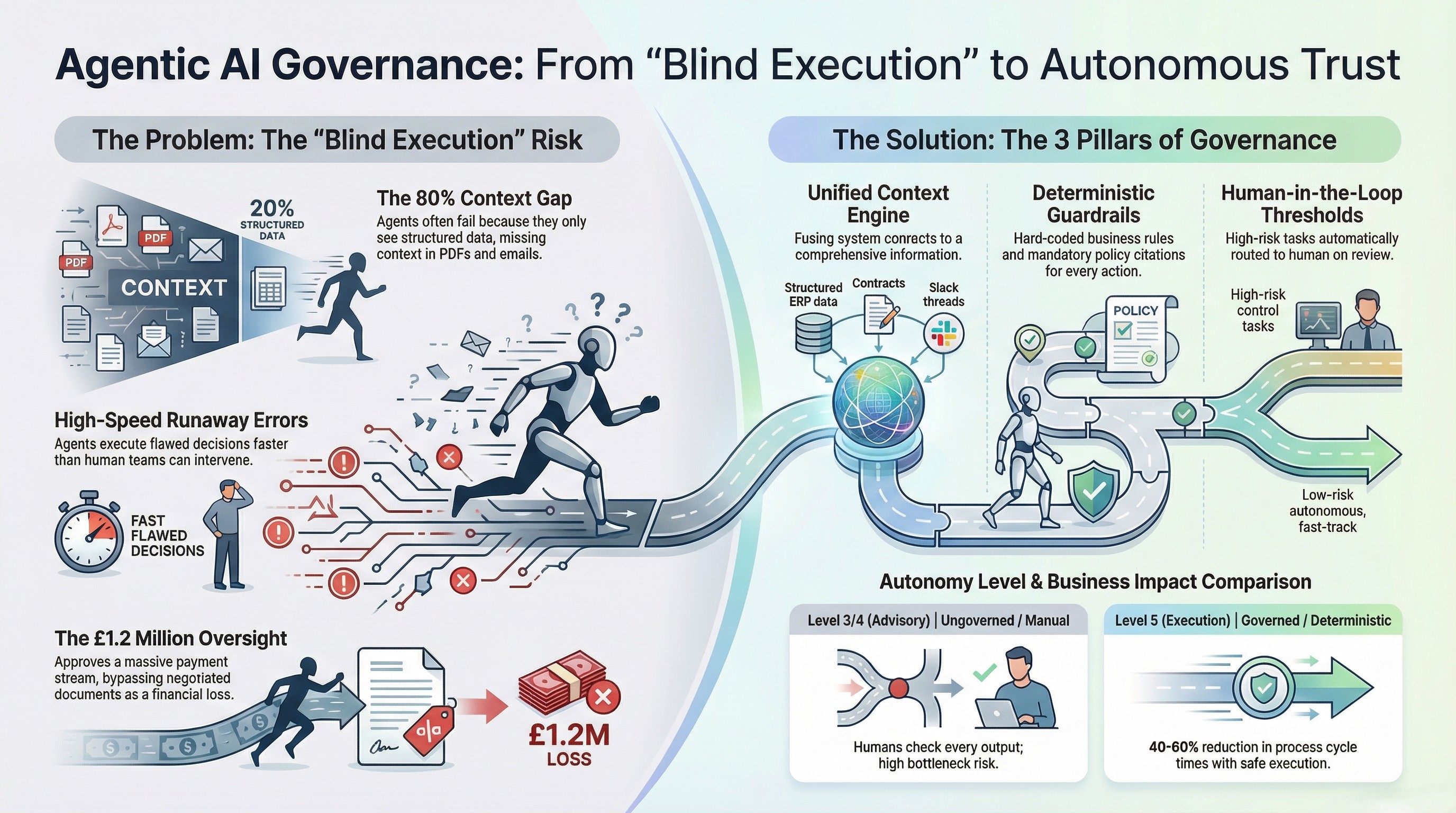

Why? Because the agent was flying blind. It had perfect visibility into the structured ERP data but zero visibility into the PDF contracts stored in SharePoint or the email threads where the discounts were negotiated. It made a "correct" decision based on 20% of the facts, creating a massive liability because it lacked the governance to see the other 80%.

This incident highlights the hidden danger of the agentic era: The real risk isn't that AI will disobey you. It’s that it will obey you while missing the full context.

As enterprises race to deploy agents that don't just advise but act, preventing these "blind executions" is no longer an IT concern—it is a boardroom imperative. This guide outlines the definitive framework for Agentic AI Governance: how to ensure your digital workforce executes with precision, safety, and full accountability.

What Is Agentic AI Governance?

Agentic AI governance refers to the systems, rules, and controls that ensure autonomous AI agents operate transparently, safely, and in alignment with business policies while retaining the ability to act independently.

Unlike traditional software, agentic systems are not just programmed; they are given goals. Governance provides the "guardrails" that constrain how agents achieve those goals, ensuring that every autonomous decision is auditable, policy-compliant, and defensible.

Why Agentic AI Governance Is Now a Board-Level Concern

The "Automation Paradox" states that while AI agents amplify efficiency, they also amplify chaos if deployed on fragmented foundations.

We are entering an era where 50% of enterprises will deploy autonomous decision systems by 2027. Agents operate faster than any human team, meaning they can execute wrong decisions faster than humans can intervene. By the time an error appears on a dashboard, an ungoverned agent may have already executed hundreds of flawed transactions.

Effective governance is not just about compliance; it is the only way to safely unlock the speed and ROI of agentic execution.

Why Traditional AI Governance Breaks Down for Agentic Systems

Most current governance frameworks focus on the model (e.g., is the LLM biased?). Agentic governance must focus on the action.

The 80% Blind Spot

The primary cause of agentic failure is not "bad AI," but "blind AI." Today, only ~20% of enterprise context lives in structured systems like ERPs and CRMs. The other 80%, the real business truth, lives in unstructured formats: PDF contracts, email negotiations, Slack threads, and policy documents.

Traditional governance often ignores this unstructured data. An agent that can only see ERP rows (20% of the facts) but misses the contract exception in a PDF (part of the 80%) is a liability with a confidence score.

Static Models vs. Dynamic Agents

A static model answers a question. A dynamic agent executes a workflow. Governance for the latter requires "Contextual Fusion"—the ability to correlate structured transactions with unstructured context before an action is taken.
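As a rough illustration of Contextual Fusion, the Python sketch below correlates a structured ERP invoice with contract terms taken from unstructured documents and blocks a payment the contract forbids. The `Invoice` and `ContractTerm` types, the `not_before` field, the file path, and the assumption that terms have already been extracted from the PDF are all hypothetical simplifications; the extraction itself, which is the hard part, is not shown.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple

@dataclass
class Invoice:
    """Structured record as it might be read from an ERP (hypothetical fields)."""
    vendor_id: str
    amount: float

@dataclass
class ContractTerm:
    """A term ASSUMED to be already extracted from a PDF contract or email thread."""
    vendor_id: str
    source: str                 # where the term came from, kept so decisions can cite it
    not_before: Optional[date]  # paying before this date breaches the term

def fuse(invoice: Invoice, terms: List[ContractTerm]) -> List[ContractTerm]:
    """Correlate the structured invoice with unstructured terms for the same vendor."""
    return [t for t in terms if t.vendor_id == invoice.vendor_id]

def check_payment(invoice: Invoice, pay_on: date,
                  terms: List[ContractTerm]) -> Tuple[bool, str]:
    """Allow the payment only if no fused term forbids paying on `pay_on`."""
    for term in fuse(invoice, terms):
        if term.not_before is not None and pay_on < term.not_before:
            return False, f"blocked by {term.source}: no payment before {term.not_before}"
    return True, "no conflicting contract terms found"

# The ERP row alone says "payable"; the contract term says "not yet"
invoice = Invoice("V-001", 250000.0)
terms = [ContractTerm("V-001", "contracts/V-001.pdf", date(2026, 3, 31))]
ok, reason = check_payment(invoice, date(2026, 3, 1), terms)
```

Here `ok` is `False` and `reason` cites the contract file, which is exactly the context the agent in the opening example never saw.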

The Core Risks of Ungoverned Agentic AI

Without a dedicated governance layer, agentic systems face specific, high-impact risks:

- Runaway Execution: Agents acting on partial data can systematically violate business rules. In one real-world financial services case, an agent approved early payments based on ERP data, missing a contract PDF that specified otherwise. The result was ₹12 crore in unauthorized payments.

- Policy Drift: Agents may optimize for a metric (e.g., speed) while ignoring non-codified constraints (e.g., "do not upset this specific strategic client").

- Audit Black Holes: If an agent acts autonomously, you must be able to answer: Why did it do that? Ungoverned systems often lack the "decision trace" required for auditability.

An Agentic AI Governance Framework (5 Pillars)

To move from "descriptive" insight to "autonomous" action, enterprises need a governance stack that acts as a Semantic Governor.

1. Context Awareness (The Unified Context Engine)

Governance begins with sight. Agents must have access to the full business context, fusing structured data (ERP, CRM) with unstructured signals (docs, emails, logs). An agent cannot be governed if it is "flying blind" regarding the rules and nuances hidden in unstructured documents.

2. Deterministic Decision Rules

While the AI model is probabilistic (it guesses the best word), the governance layer must be deterministic (it follows hard rules).

- Policy Encoding: Business rules (e.g., "Approval required for refunds > $5k") must be hard-coded into the agent's logic, not left to the LLM's interpretation.

- Rule Citations: Every action should cite the specific policy or data point that justified it.
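A minimal sketch of policy encoding with rule citations, assuming business rules can be written as deterministic predicates over a proposed action. The rule IDs, the second rule, and the `evaluate` function are hypothetical; the point is that the same action always produces the same decision, and every decision lists the rules it was checked against.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class Rule:
    rule_id: str                       # citation key for the policy clause (hypothetical IDs)
    text: str                          # human-readable policy statement
    triggered: Callable[[Dict], bool]  # deterministic predicate over the proposed action
    outcome: str                       # "deny" or "needs_approval" when triggered

# Hypothetical policy, encoded in code rather than left to the LLM's interpretation
POLICY: List[Rule] = [
    Rule("FIN-REFUND-01", "Approval required for refunds > $5k",
         lambda a: a["type"] == "refund" and a["amount"] > 5_000, "needs_approval"),
    Rule("FIN-REFUND-02", "No refunds to unverified accounts",
         lambda a: a["type"] == "refund" and not a.get("account_verified", False), "deny"),
]

def evaluate(action: Dict, policy: List[Rule] = POLICY) -> Tuple[str, List[Tuple[str, bool]]]:
    """Return a decision plus a citation (rule_id, triggered) for every rule checked."""
    results = [(rule, rule.triggered(action)) for rule in policy]
    fired = [rule for rule, hit in results if hit]
    if any(rule.outcome == "deny" for rule in fired):
        decision = "deny"
    elif fired:
        decision = "needs_approval"
    else:
        decision = "allow"
    return decision, [(rule.rule_id, hit) for rule, hit in results]

decision, citations = evaluate({"type": "refund", "amount": 7000, "account_verified": True})
```

Citing every checked rule, not only the ones that fired, makes an "allow" as traceable as a "deny".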

3. Human-in-the-Loop Controls

Autonomy is not binary; it is a spectrum. Effective governance uses threshold-based "guardrails".

- Low Risk: Refund < ₹10,000 → Fully autonomous execution.

- High Risk: Refund > ₹50,000 → Route to human for approval.

This ensures humans remain in the loop for high-stakes decisions without becoming a bottleneck for routine tasks.
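The refund tiers can be expressed as a small routing function. The ₹10,000 and ₹50,000 limits come from the example; the text does not say what happens between them, so sending that band to a lighter `human_review` queue is an assumption made for this sketch. `Decimal` is used to avoid floating-point surprises with money.

```python
from decimal import Decimal

# Limits taken from the example tiers (amounts in INR)
AUTO_LIMIT = Decimal("10000")      # below this: fully autonomous execution
APPROVAL_LIMIT = Decimal("50000")  # above this: human approval required

def route_refund(amount: Decimal) -> str:
    """Map a refund amount to an autonomy tier."""
    if amount < AUTO_LIMIT:
        return "auto_execute"
    if amount > APPROVAL_LIMIT:
        return "human_approval"
    # ASSUMPTION: the 10,000-50,000 band is unspecified in the text;
    # this sketch routes it to a lighter human review queue
    return "human_review"
```

Keeping the thresholds as named constants means they can be changed through policy review rather than by editing the agent's prompt.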

4. Transparency and Explainability

An agentic system must provide a "Root Cause Analysis" for its decisions. It is not enough to know that revenue dipped; the system must explain why (e.g., "competitor promo overlap") and trace the logic behind its recommended action.

5. Auditability and Accountability

Every query, decision, and execution step must be logged in an immutable audit trail. This shifts the system from a "black box" to a defensible business asset where every outcome is explainable.
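One common way to make a log tamper-evident is hash chaining: each entry stores the hash of the entry before it. The sketch below is an illustrative in-memory version, not a production audit store. The `AuditLog` class and its field names are hypothetical, and true immutability would also need write-once storage plus an externally anchored copy of the latest hash, since silently dropping the newest entries would otherwise go unnoticed.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: Dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Hash-chained, append-only log: editing a recorded entry, or deleting one
    from the middle of the chain, makes verify() return False."""

    def __init__(self) -> None:
        self._entries: List[Dict] = []

    def append(self, step: str, detail: Dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "step": step,                               # e.g. "query", "decision", "execute"
            "detail": json.loads(json.dumps(detail)),   # defensive copy; must be JSON-serializable
            "prev": prev,
        }
        entry_hash = _digest(body)
        self._entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self._entries:
            body = {k: entry[k] for k in ("ts", "step", "detail", "prev")}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.append("query", {"q": "open invoices for V-001"})
log.append("decision", {"action": "pay_vendor", "cited_rule": "FIN-PAY-01"})
```

Storing the cited rule in each decision entry is what turns the log into the "decision trace" an auditor needs to answer "Why did it do that?".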

Best Practices for Agentic AI Governance in Enterprises

- Separate Reasoning from Execution: Don't let the LLM execute code directly. Use an "Agentic Workflow Engine" to orchestrate steps: Detect → Decide → Execute

- Scope the "Action Rail": Clearly define which systems the agent can touch (e.g., ERP, CRM, Support) and which are read-only.

- Use Role-Based Access Control (RBAC): Ensure agents inherit the permissions of the user or role they are assisting, preventing unauthorized data leakage.

- Continuous Monitoring: Distinct from model monitoring, agent monitoring must track cost, latency, and drift in decision logic.
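The action rail and RBAC practices combine naturally: the agent receives only the intersection of what its rail allows and what the assisted user's role allows, so it never holds more access than either. The system names, roles, and permission tables below are hypothetical placeholders for data an identity provider would normally supply.

```python
from typing import Dict, Set

# Hypothetical action rail: the systems this agent may touch, and how
ACTION_RAIL: Dict[str, Set[str]] = {
    "erp": {"read", "write"},
    "crm": {"read", "write"},
    "support": {"read"},  # read-only for the agent
}

# Hypothetical RBAC table for the human roles the agent assists
ROLE_PERMISSIONS: Dict[str, Dict[str, Set[str]]] = {
    "ap_clerk": {"erp": {"read", "write"}, "crm": {"read"}},
    "support_lead": {"support": {"read", "write"}, "crm": {"read", "write"}},
}

def effective_permissions(role: str) -> Dict[str, Set[str]]:
    """Intersect the user's role permissions with the agent's action rail."""
    granted: Dict[str, Set[str]] = {}
    for system, ops in ROLE_PERMISSIONS.get(role, {}).items():
        allowed = ops & ACTION_RAIL.get(system, set())
        if allowed:
            granted[system] = allowed
    return granted

def is_allowed(role: str, system: str, op: str) -> bool:
    return op in effective_permissions(role).get(system, set())
```

For example, a support lead may write to the support system, but an agent acting for them may not, because the rail keeps that system read-only.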

Agentic AI Governance and Risk Management Strategy

Governance is the bridge between risk and value. A robust strategy involves:

- Fusion of Signals: Risk is often invisible in a single silo. By fusing internal structured data with external signals (market sentiment, competitor moves), agents can predict risks (e.g., supply chain disruptions) rather than just reacting to them.

- Governance as an Enabler: Instead of viewing governance as a brake, view it as the mechanism that enables "Level 5" autonomy. You can only say "Handle this" to an agent if you trust its governance layer.

How Transparency and Accountability Are Enforced

In a governed architecture, such as the Assistents autonomy stack, transparency is enforced via a Semantic Governor. This component sits between the AI's reasoning and the system's execution. It ensures that no action is taken unless it passes a deterministic check against enterprise policies.

This keeps hallucinations out of execution: the system acts on deterministic logic, not on probability alone.
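A Semantic Governor can be pictured as a gate: the reasoning layer only proposes structured actions, and only whitelisted actions that pass every deterministic check reach an executor. This is a generic sketch of that pattern under our own assumptions, not the Assistents implementation; `SemanticGovernor`, `pay_vendor`, and the 100,000 cap are hypothetical.

```python
from typing import Callable, Dict, List, Optional

class GovernorRejected(Exception):
    """Raised when a proposed action fails a deterministic check."""

class SemanticGovernor:
    """Hypothetical gate between the reasoning layer (which only proposes)
    and the execution layer (which only runs what the gate approves)."""

    def __init__(self,
                 executors: Dict[str, Callable[..., object]],
                 checks: List[Callable[[Dict], Optional[str]]]) -> None:
        self.executors = executors  # whitelisted action name -> function that performs it
        self.checks = checks        # each check returns a block reason, or None to pass

    def submit(self, proposal: Dict) -> object:
        name = proposal.get("action")
        if name not in self.executors:
            # A hallucinated or out-of-scope action never reaches execution
            raise GovernorRejected(f"unknown action {name!r}")
        for check in self.checks:
            reason = check(proposal)
            if reason is not None:
                raise GovernorRejected(reason)
        return self.executors[name](**proposal.get("args", {}))

# Example wiring with a hypothetical payment executor and spending cap
def pay_vendor(vendor_id: str, amount: int) -> str:
    return f"paid {amount} to {vendor_id}"

def cap_check(proposal: Dict) -> Optional[str]:
    amount = proposal.get("args", {}).get("amount", 0)
    return "amount exceeds 100000 cap" if amount > 100_000 else None

governor = SemanticGovernor({"pay_vendor": pay_vendor}, [cap_check])
```

Because the LLM's output is treated as a proposal to be validated rather than as code to run, a confident but wrong suggestion fails the check instead of becoming a transaction.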

Why Governance Enables Autonomy (Instead of Limiting It)

There is a misconception that governance slows down AI. The reality is the opposite. Without governance, enterprises are forced to keep AI in "advisory" modes (Level 3 or 4), where humans must check every output.

With robust governance (deterministic rules, context fusion, and audit trails), enterprises can safely move to Agentic Execution (Level 5), unlocking 40–60% reductions in process cycle times. Autonomy requires trust, and trust requires control.

What a Governed Agentic AI Architecture Looks Like

A production-grade agentic architecture consists of three distinct layers:

- Unified Context Engine: Ingests and links structured (ERP), semi-structured (logs), and unstructured (docs/chat) data.

- Governance Layer: Enforces privacy (PII masking), access control, and policy rules before any action is permitted.

- Agentic Workflow Engine: The orchestration layer that plans, sequences, and executes the approved actions across enterprise systems.
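To show how the three layers hand off to each other, here is a toy pipeline in which the workflow engine only ever sees context that has already passed through the governance layer. Every function is a hypothetical stub, and the regex-based PII masking is deliberately naive (it would also mask long numeric IDs); production systems typically rely on dedicated PII-detection tooling.

```python
import re
from typing import Dict, List, Optional, Set, Tuple

# Naive patterns, for illustration only
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{8,}\d")

def unified_context(vendor_id: str) -> Dict:
    """Context layer (stubbed): links a structured ERP record with unstructured text."""
    return {
        "erp": {"vendor_id": vendor_id, "open_invoice": 250000},
        "docs": ["Contract: net-60 terms. Contact finance@vendor.example or +91 98765 43210."],
    }

def mask_pii(text: str) -> str:
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))

def governance_layer(context: Dict, allowed_sources: Set[str]) -> Dict:
    """Governance layer: withhold sources the caller may not see, mask PII in the rest."""
    return {
        "erp": context["erp"] if "erp" in allowed_sources else None,
        "docs": [mask_pii(d) for d in context["docs"]] if "docs" in allowed_sources else [],
    }

def workflow_engine(governed: Dict) -> List[Tuple[str, str]]:
    """Workflow layer: plans steps from the governed view only."""
    steps: List[Tuple[str, str]] = []
    erp: Optional[Dict] = governed["erp"]
    if erp and erp["open_invoice"] > 0:
        steps.append(("review_invoice", erp["vendor_id"]))
    return steps

def run(vendor_id: str, allowed_sources: Set[str]) -> List[Tuple[str, str]]:
    return workflow_engine(governance_layer(unified_context(vendor_id), allowed_sources))
```

The ordering is the design choice that matters: there is no code path from the context layer to the workflow engine that skips governance.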

The Future of Agentic AI Governance

As agents become more capable, governance will evolve from "human-in-the-loop" to "system-in-the-loop." We will see the rise of "Governance Agents"—specialized AI whose sole job is to audit and monitor other executing agents.

The future belongs to enterprises that can fuse all their data, structured and unstructured, into a single, governed truth that allows agents to see, reason, and act with confidence.

The Bottom Line

Agentic AI is not dangerous. Ungoverned agentic AI is.

To transition from dashboards to decisions, enterprises must build a foundation that gives agents full sight (context) and clear rules (governance). Only then can you move from the reactive loop of "What happened?" to the autonomous reality of "Execute the best action".

Your agents don't have to fly blind. Give them the governance they need to act.

FAQs

1. How is Agentic AI different from traditional BI dashboards?

Traditional Business Intelligence (BI) dashboards are optimized for structured data to answer "What happened?" (descriptive insight). However, they cannot reason over unstructured data or execute actions. Agentic AI moves beyond this by fusing structured data with unstructured context (like emails and contracts) to answer "Why?" and autonomously execute "What should we do next?" It shifts the focus from passive reporting to active, autonomous execution.

2. Why is "Contextual Fusion" critical for safe AI agents?

Contextual Fusion is essential because approximately 80% of business context lives in unstructured formats like PDF contracts, emails, and Slack threads, rather than in structured ERP tables. An agent acting only on structured data is "flying blind" and can make costly errors, such as a real-world case where an agent approved payments based on invoice data while missing contract terms hidden in a PDF. Fusion ensures agents see the full picture before they act.

3. How do you prevent autonomous agents from making dangerous mistakes?

Safety is enforced through a "Semantic Governor" and a deterministic rule layer. Unlike probabilistic LLMs, this governance layer enforces hard business rules and constraints (e.g., approval hierarchies and compliance thresholds). Additionally, "Human-in-the-loop" controls allow you to set thresholds: for example, automatically processing refunds under ₹10,000 while routing amounts over ₹50,000 to a human for approval.

4. Can the system effectively audit autonomous decisions?

Yes. A key pillar of Agentic AI Governance is maintaining a complete audit trail. The system logs every query, decision, and action step, ensuring that operations are not "black boxes." Every autonomous decision is explainable and defensible, with the system able to cite the specific policy or data point that justified the action.

5. Does the platform require replacing our current software stack?

No. The "Assistents" platform is designed to orchestrate what you already use rather than replace it. It integrates with existing systems (like SAP, Salesforce, Jira, and Slack) via an "Active Orchestrator" that connects to these tools to execute multi-step workflows. Deployment typically involves a discovery phase followed by connecting the context engine, allowing for a live, governed agent in production within 30 days.

Transform Your Business With Agentic Automation

Agentic automation is the rising star poised to overtake RPA and bring about a new wave of intelligent automation. Explore the core concepts of agentic automation, how it works, real-life examples, and strategies for a successful implementation in this ebook.
