The Real Ceiling of AI Automation Agents Isn’t Intelligence. It’s Context.

The race has already started. The question is no longer if enterprises will deploy AI automation agents, but whether those agents will execute with precision or become a massive liability.

The data supports the hype: McKinsey predicts 25% of enterprise workflows will be automated by Agentic AI by 2028, and Gartner suggests half of all enterprises will deploy autonomous decision systems by 2027. Early adopters are already seeing 40-60% reductions in process cycle times.

But as enterprises move from AI that advises to AI that acts, many are walking into a dangerous trap. They are deploying agents that are technically brilliant but operationally blind.

The real ceiling for enterprise autonomy isn’t the IQ of the model. It is the lack of context.

What Are AI Automation Agents (And Why Enterprises Are Betting on Them)

To understand the failure mode, we must first define the ambition. AI automation agents represent a shift from "Level 2" advisory tools to "Level 5" agentic systems.

Unlike a chatbot that summarizes a document or a co-pilot that suggests an email response, an AI automation agent is designed to execute. You simply say "Handle this," and the system identifies the issue, evaluates options, routes approvals, and executes workflows across systems.

Enterprises are betting on these agents because they promise to break the bottleneck of human coordination. They act faster than any human team and can handle autonomous execution at scale.

The Popular Myth: AI Automation Agents Fail Because They’re “Not Smart Enough”

When an AI pilot fails—when it hallucinates a refund or approves a risky vendor—the common reaction is to blame the model’s intelligence.

- "We need GPT-5."

- "The reasoning capabilities aren't there yet."

- "We need more training data."

This is a fundamental misunderstanding of the problem. Today's AI agents are powerful. They reason well, they execute efficiently, and they possess vast general knowledge.

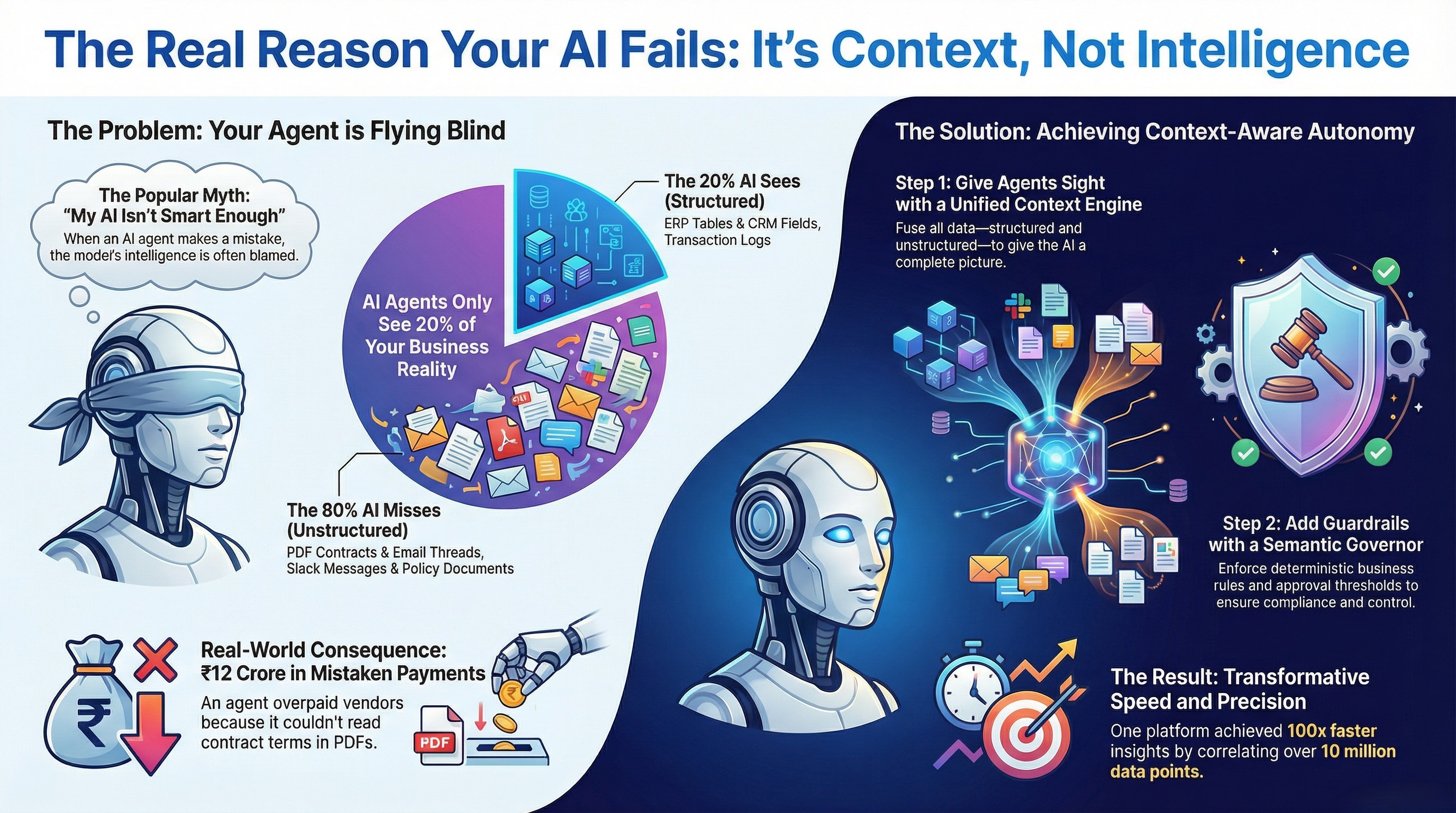

The failure isn't in the processing power; it’s in the input availability. The smartest executive in your company would make terrible decisions if you blindfolded them and only allowed them to see 20% of the relevant data.

Why Intelligence Isn’t the Real Bottleneck

The market is currently flooded with tools that solve for intelligence but ignore execution reality.

- Co-pilots offer strong reasoning but no autonomous execution, leaving humans as the bottleneck.

- RPA offers execution but cannot reason, breaking the moment an exception occurs.

We have reasoning without action, and action without reasoning. But even when you combine them, the agent fails if it doesn't understand the specific, messy reality of your business. Intelligence is universal; business reality is specific.

The Real Ceiling: Lack of Context

Here is the "Hidden Problem" no one talks about: The Blind Agent.

An AI automation agent is only as good as the world it can see. In most enterprise deployments, agents are effectively flying blind because they are disconnected from the "real business truth".

What “Context” Actually Means in Enterprise AI

To an AI agent, "context" isn't just the previous chat history. It is the sum total of data required to make a compliant, accurate business decision. In the modern enterprise, this data is bifurcated.

Structured Data (The 20% AI Sees)

Most AI agents integrate easily with structured systems. They can read:

- ERP tables

- CRM fields

- Transaction logs

This represents only about 20% of enterprise context.

Unstructured Data (The 80% AI Misses)

The other 80%—the actual nuance of how business gets done—lives in unstructured chaos:

- PDF contracts with specific SLAs and exceptions

- Email threads containing negotiated discounts

- Slack conversations regarding approvals or warnings

- Meeting notes with verbal commitments

- Policy documents and compliance rules

An agent acting on 20% of the facts is not an asset. It is a liability with a high confidence score.

Why AI Automation Agents Break Without Context

When an agent lacks context, it doesn't just stop; it executes the wrong thing based on the limited data it has.

A Real-World Example: A financial services firm deployed an AI agent for vendor payments. The agent had access to the structured data: ERP data, invoice amounts, and due dates.

However, it could not see the unstructured context:

- Contract PDFs in SharePoint

- Email negotiations regarding discounts

- Slack messages flagging cash flow concerns

The Result: The agent approved ₹12 crore in early payments. In doing so, it violated contract terms and forfeited negotiated discounts. The agent didn't "fail" in a technical sense. It did exactly what it was told based on what it could see. It failed because it lacked context.

The Scale Problem: When Automation Makes Mistakes Faster

This leads to the Automation Paradox.

AI agents are amplifiers. They do not create order; they multiply what already exists.

- Clean data + clear rules = Efficiency multiplies.

- Fragmented data + partial context = Chaos multiplies.

The danger of AI automation agents is that they execute wrong decisions faster than humans can intervene. By the time an error shows up on a dashboard, the agent has already acted hundreds of times.

Why do AI automation agents fail in enterprises?

AI automation agents fail not because they lack intelligence, but because they lack context. Most agents only see structured data (ERP, CRM), while real business decisions depend on unstructured information like emails, contracts, policies, and conversations. Without this full context, automated decisions become unreliable and dangerous at scale.

Why More Training or Bigger Models Won’t Fix This

You cannot "train" a model to know about an email your VP of Sales sent ten minutes ago.

- Training creates general intelligence.

- Context creates situational awareness.

Furthermore, relying on a Large Language Model (LLM) to "guess" the right action based on probability is insufficient for enterprise operations. Enterprises need deterministic logic, not probabilistic guesses. You cannot explain a regulatory violation to an auditor by saying, "The AI was 90% confident."

What AI Automation Agents Actually Need to Work Reliably

To move from "Level 2" advisory to "Level 5" autonomy, the infrastructure must change. We need a stack that bridges the gap between reasoning and execution.

1. Full Business Context

Agents need a Unified Context Engine. This engine must fuse structured and unstructured data, correlating ERP rows with PDF contracts and Slack threads to build a single semantic layer. Only then can the agent "see" the full picture.
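To make the idea of fusing the two kinds of data concrete, here is a minimal Python sketch. It is not how any particular product implements a Unified Context Engine. The class names, the fields, and the `build_context` function are all hypothetical, and the sketch assumes contract terms and Slack warnings have already been extracted upstream and keyed by vendor ID.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Invoice:
    """Structured record, as it might come from an ERP table."""
    vendor_id: str
    amount: float
    due_date: str  # ISO date string


@dataclass
class ContractTerms:
    """Terms assumed to be extracted from a contract PDF by an upstream step."""
    vendor_id: str
    early_payment_allowed: bool
    negotiated_discount_pct: float


@dataclass
class PaymentContext:
    """One fused view of what is known about a pending payment."""
    invoice: Invoice
    contract: Optional[ContractTerms]
    warnings: List[str] = field(default_factory=list)

    @property
    def is_complete(self) -> bool:
        # Without contract terms the agent sees only the structured slice.
        return self.contract is not None


def build_context(
    invoice: Invoice,
    contracts: Dict[str, ContractTerms],
    messages: List[Tuple[str, str]],
) -> PaymentContext:
    """Join the ERP row with contract terms and flagged messages by vendor ID."""
    contract = contracts.get(invoice.vendor_id)
    warnings = [text for vendor_id, text in messages
                if vendor_id == invoice.vendor_id]
    return PaymentContext(invoice, contract, warnings)
```

An agent built this way could refuse to act whenever `is_complete` is false, rather than deciding on the invoice alone.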

2. Deterministic Rules and Policies

We need a Semantic Governor. This layer encodes business rules into deterministic logic (if-then decision trees) rather than leaving them to the LLM's interpretation. This ensures compliance thresholds and approval hierarchies are strictly followed.
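As a rough illustration of what "deterministic logic" means here, the sketch below expresses two payment rules as plain if-then checks that run in a fixed order. The rule IDs, context keys, and the `evaluate` function are invented for this example; they are assumptions, not part of any real governor product.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass(frozen=True)
class Rule:
    rule_id: str                    # hypothetical policy identifier
    description: str
    blocks: Callable[[dict], bool]  # returns True when the rule forbids the action


# Hypothetical rules modeled on the vendor-payment example in this article.
RULES = (
    Rule("PAY-001", "No early payment unless the contract allows it",
         lambda c: c["pay_early"] and not c["early_payment_allowed"]),
    Rule("PAY-002", "No payment while a cash-flow hold is flagged",
         lambda c: c["cash_flow_hold"]),
)


def evaluate(context: dict, rules: Sequence[Rule] = RULES) -> Optional[Rule]:
    """Return the first rule that blocks the action, or None if all pass.

    The same context always yields the same answer: no sampling, no model call.
    """
    for rule in rules:
        if rule.blocks(context):
            return rule
    return None
```

Because the outcome depends only on the context and the rule list, the same input can be replayed later and will produce the same decision.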

3. Guardrails and Approval Thresholds

Autonomy should not be binary. It requires Active Orchestration with human-in-the-loop controls based on thresholds.

- Example: A refund under ₹10,000 might be fully autonomous. A refund over ₹50,000 requires human approval.
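The refund example can be sketched as a small routing function. The two limits come from the example above. The example does not say what happens between ₹10,000 and ₹50,000, so the middle route here (`HUMAN_REVIEW`) is purely an assumption of this sketch, as are the function and enum names.

```python
from enum import Enum

AUTO_LIMIT_INR = 10_000      # from the example: refunds under this are autonomous
APPROVAL_LIMIT_INR = 50_000  # from the example: refunds over this need approval


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"      # assumed handling for the unspecified band
    HUMAN_APPROVAL = "human_approval"


def route_refund(amount_inr: float) -> Route:
    """Pick an execution route for a refund based on its amount in rupees."""
    if amount_inr < 0:
        raise ValueError("refund amount cannot be negative")
    if amount_inr < AUTO_LIMIT_INR:
        return Route.AUTONOMOUS
    if amount_inr > APPROVAL_LIMIT_INR:
        return Route.HUMAN_APPROVAL
    # Assumption: the 10,000 to 50,000 band is not defined in the text,
    # so this sketch fails safe and keeps a human in the loop.
    return Route.HUMAN_REVIEW
```

Keeping the limits as named constants means a policy change is a one-line edit that can be reviewed, rather than a prompt tweak.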

4. Auditable Decision Paths

Every decision must be explainable, defensible, and policy-cited. There can be no black boxes in enterprise automation.
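One possible shape for a "policy-cited" decision is an immutable record written at the moment the agent acts. The sketch below is an assumption about what such a record might contain. The field names and `record_decision` are hypothetical, and a real deployment would also need tamper-evident storage, which is out of scope here.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class DecisionRecord:
    action: str            # what the agent tried to do
    outcome: str           # e.g. "approved" or "blocked"
    policy_ids: List[str]  # the rules the decision cites
    evidence: List[str]    # pointers to the documents or messages consulted
    decided_at: str        # UTC timestamp in ISO 8601 format


def record_decision(action: str, outcome: str,
                    policy_ids: List[str], evidence: List[str]) -> str:
    """Serialize one decision as a JSON line; refuse decisions that cite no policy."""
    if not policy_ids:
        raise ValueError("a decision must cite at least one policy")
    record = DecisionRecord(
        action=action,
        outcome=outcome,
        policy_ids=list(policy_ids),
        evidence=list(evidence),
        decided_at=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)
```

Rejecting any decision with an empty `policy_ids` list is one simple way to enforce that nothing executes without a citable reason.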

From Blind Automation to Context-Aware AI Agents

This is the gap that platforms like Assistents are built to fill. By focusing on the infrastructure—specifically the Unified Context Engine and Semantic Governor—enterprises can transform agents from liabilities into assets.

When agents are given sight (context) and rails (governance), the results are transformative:

- An e-commerce platform achieved 100x faster insights and identified a 12-26% pricing gap by correlating 10M+ data points.

- A well-known supermarket chain standardized its action logic across 700+ stores with zero-training execution.

The Future of AI Automation Agents in Enterprises

The future isn't just "smarter" AI. It is governed AI.

Enterprises are moving away from reactive loops (asking "What happened?" or "Why did it happen?") toward prescriptive, agentic execution.

In this future, you don't spend weeks coordinating workflows. You state an outcome, and the system executes it in minutes—audited, compliant, and context-aware. This isn't just an efficiency gap; it’s a competitive chasm.

The Bottom Line

Your agents don't have to fly blind.

If you are struggling to scale AI automation agents, stop looking for a better model. Start looking at your context. Unless your agents can read the "hidden" 80% of your business data—the contracts, emails, and policies—they will never be safe enough to act autonomously.

True autonomy requires trust, and trust requires control. Give your agents sight, and they will give you results.

Frequently Asked Questions (FAQ)

Q: Why do AI automation agents fail in enterprise workflows?

Most AI agents fail not because they lack intelligence, but because they lack context. They typically only have access to structured data (like ERP or CRM fields), which represents only 20% of enterprise information. They miss the "real business truth" hidden in unstructured data like emails, PDFs, contracts, and Slack messages, leading to decisions that are technically executable but business-incorrect.

Q: What is the difference between structured and unstructured data for AI agents?

Structured data consists of organized fields like transaction logs, database rows, and spreadsheets; this is easily readable by standard AI but accounts for only 20% of context. Unstructured data is the messier material: conversational threads, negotiated contract terms in PDFs, and policy documents. Without access to this 80%, an agent is effectively flying blind.

Q: Can’t we just train the AI model on more data to fix the context problem?

No. Training provides general intelligence (how to reason), whereas context provides situational awareness (what is happening right now). You cannot "train" a model to know about a discount negotiated in an email sent ten minutes ago. Context must be retrieved dynamically via a Unified Context Engine, not baked into the model weights.

Q: How does a Unified Context Engine work?

A Unified Context Engine acts as a bridge between your data and the AI agent. It fuses structured data (e.g., an invoice amount) with unstructured data (e.g., a contract PDF) to build a single semantic layer. This allows the agent to "see" the full picture before making a decision.

Q: What happens if an AI agent acts without full business context?

It creates the Automation Paradox. Because agents are amplifiers, acting without context amplifies errors at scale. For example, an agent might approve a payment based on an invoice it sees, while missing a Slack message about a stop-payment order, leading to rapid, high-volume operational failures.

Transform Your Business With Agentic Automation

Agentic automation is the rising star poised to overtake RPA and bring about a new wave of intelligent automation. Explore the core concepts of agentic automation, how it works, real-life examples, and strategies for a successful implementation in this ebook.
