Top 8 Agentic AI Use Cases in Data Engineering (2025 Guide)

Picture this: It’s Monday morning, and a data engineer opens their dashboard only to see red alerts everywhere. Pipelines are failing, data sources are acting up, and the team is scrambling to fix things. Sound familiar? That’s the reality of modern data engineering: fast, unpredictable, and frankly exhausting. As data volumes grow, so does operational complexity, making reliable and efficient systems even harder to maintain.

Now imagine a world where these alerts start resolving themselves. Where the system notices a problem, fixes it, and keeps everything flowing. Sounds like science fiction? Enter agentic AI. These AI agents can observe, learn, and act across your data pipelines, handling tasks that once required a full team of engineers. By automating the management of both structured and unstructured data, agentic AI can raise data quality and reliability.

Let’s dive into what this really means and how it’s changing the way data is managed in 2025.

What Is Agentic AI in Data Engineering?

Agentic AI isn’t your typical automation tool. Think of it as a team member who never sleeps, always watching your data pipelines, learning patterns, and acting when something feels off.

Traditional automation follows rules — if X happens, do Y. Agentic AI, however, adapts and learns. If a data source changes format or a schema drifts, the agent notices and makes adjustments. It’s not guessing; it’s observing patterns and deciding the best course of action.

Why Agentic AI Matters

The benefits aren’t just hype. Teams using agentic AI report noticeable improvements in several areas:

- Pipelines that fix themselves: No more scrambling when a job fails. Agents detect and correct issues, keeping operations running.

- Learning on the fly: Agents adapt to changes in data sources without manual intervention.

- Better uptime: By handling anomalies quickly, pipelines stay available, and delays are minimized.

- Lower costs: Agents adjust processing to save compute and storage resources.

- Cleaner data: Missing values, duplicates, or strange anomalies are corrected automatically.

Imagine freeing your engineers from constant firefighting. That’s the real impact here.

The Role of Data Engineers in the Age of Agentic AI

The rise of agentic AI is reshaping the landscape of data engineering, but it’s not making data engineers obsolete—instead, it’s elevating their role. As AI systems take over routine tasks like monitoring data pipelines, handling schema changes, and automating data quality checks, data engineers are freed up to focus on the bigger picture.

Their expertise is now directed toward designing robust data infrastructure, architecting scalable data pipelines, and ensuring the delivery of high-quality data that drives business value.

In this new era, data engineers are becoming strategic partners in business growth. They’re responsible for integrating agentic AI into data engineering workflows, optimizing data flows, and maintaining the integrity and reliability of the entire data ecosystem. Rather than spending hours troubleshooting or cleaning up after broken jobs, data engineers can now invest their time in innovation, advanced analytics, and building systems that anticipate future needs.

The key is learning to collaborate with agentic AI—leveraging its strengths to automate repetitive work, while applying human judgment and creativity to solve complex challenges and ensure that data engineering continues to deliver high-impact results.

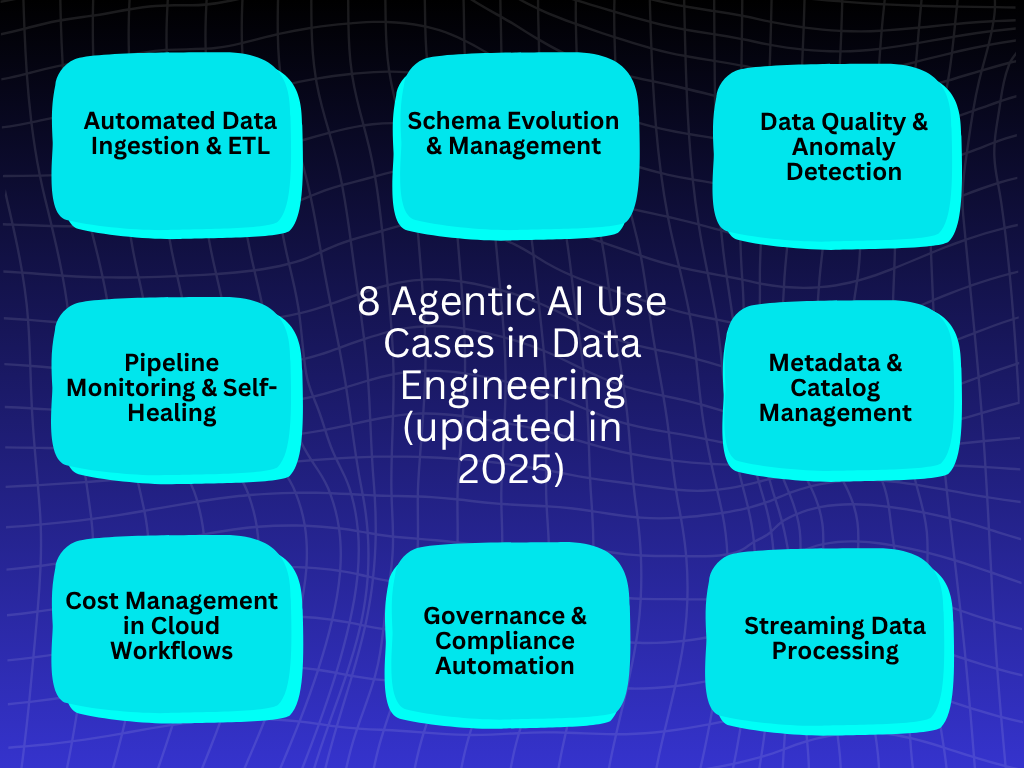

8 Agentic AI Use Cases in Data Engineering (updated in 2025)

Let’s break down the main ways agentic AI is being used in data engineering today.

1. Automated Data Ingestion & ETL

Collecting and moving data is tedious. Multiple formats, frequent updates, and countless sources make it a nightmare to manage manually. Agentic AI can spot new data sources, extract the necessary fields, transform them correctly, and load them into your warehouse — all on its own.

A retail chain, for example, can have hundreds of store databases feeding into analytics. With agentic AI, the pipeline adapts automatically without engineers rewriting scripts for every new store or system.
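To make the retail example concrete, here is a minimal sketch of one small piece of adaptive ingestion: mapping differently named source fields onto one canonical schema. The alias table and the function name `normalize_record` are assumptions made up for illustration. A real agent would learn or propose such mappings rather than read them from a hard-coded dictionary.

```python
# Hypothetical alias table: canonical field -> names seen in different store systems.
CANONICAL_ALIASES = {
    "store_id": {"store_id", "storeid", "shop_id"},
    "sold_at": {"sold_at", "timestamp", "sale_time"},
    "amount": {"amount", "total", "sale_amount"},
}


def normalize_record(raw):
    """Map a raw record onto canonical field names.

    Returns (clean, unmapped): fields that matched an alias, and fields that
    did not match anything and may need review.
    """
    lookup = {
        alias: canon
        for canon, aliases in CANONICAL_ALIASES.items()
        for alias in aliases
    }
    clean, unmapped = {}, {}
    for key, value in raw.items():
        # Normalize the source name before lookup: "Shop ID" -> "shop_id".
        canon = lookup.get(key.strip().lower().replace(" ", "_"))
        if canon is None:
            unmapped[key] = value
        else:
            clean[canon] = value
    return clean, unmapped
```

Calling `normalize_record({"Shop ID": 7, "Total": 19.99, "cashier": "B"})` keeps the store ID and amount under their canonical names and sets `cashier` aside as unmapped, so an unknown field becomes something to review instead of a reason for the load to fail.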

2. Schema Evolution & Management

Ever had a pipeline fail because a column was added to a source table? Annoying, right? Agentic AI monitors schema changes and adjusts transformations on the fly, making sure pipelines don’t break.

It’s like having a guardian watching every schema change and quietly fixing any issues before anyone notices.
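A rough sketch of the detection half of this idea follows: compare the schema a pipeline expects with the one it observes, then decide whether the drift is safe to absorb. The names `SchemaDiff`, `diff_schemas`, and `plan_action`, and the policy of auto-adapting only to added columns, are illustrative assumptions and not a description of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class SchemaDiff:
    added: dict = field(default_factory=dict)    # column -> new type
    removed: dict = field(default_factory=dict)  # column -> old type
    retyped: dict = field(default_factory=dict)  # column -> (old type, new type)

    @property
    def is_additive(self) -> bool:
        """True when nothing was removed or retyped."""
        return not self.removed and not self.retyped


def diff_schemas(expected: dict, observed: dict) -> SchemaDiff:
    """Compare two {column: type} mappings and report what drifted."""
    diff = SchemaDiff()
    for col, typ in observed.items():
        if col not in expected:
            diff.added[col] = typ
        elif expected[col] != typ:
            diff.retyped[col] = (expected[col], typ)
    for col, typ in expected.items():
        if col not in observed:
            diff.removed[col] = typ
    return diff


def plan_action(diff: SchemaDiff) -> str:
    """Hypothetical policy: absorb additive drift, escalate anything destructive."""
    if not (diff.added or diff.removed or diff.retyped):
        return "no_change"
    return "auto_adapt" if diff.is_additive else "escalate_to_human"
```

With this policy, a new `currency` column on a source table yields `"auto_adapt"`, while a dropped or retyped column yields `"escalate_to_human"`. Where to draw that line is a design choice each team has to make.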

3. Data Quality & Anomaly Detection

Bad data can ruin reports, dashboards, and insights. Agentic AI keeps an eye out for missing values, duplicates, or unusual spikes and corrects them automatically.

For a hospital processing patient records, this means lab results and appointments are consistently accurate, avoiding delays or errors that could impact patient care.
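The checks described here can be sketched in a few lines. This example only flags problems and does not correct them. It uses a median-based (modified z-score) outlier test as one reasonable choice among many. The function name `quality_report` and the default threshold of 3.5 are assumptions for illustration.

```python
from statistics import median


def quality_report(rows, key, value_field, threshold=3.5):
    """Flag missing values, duplicate keys, and outliers in a list of dict records.

    Returns row indexes for each kind of issue.
    """
    missing, duplicates, seen = [], [], set()
    for i, row in enumerate(rows):
        if row.get(value_field) is None:
            missing.append(i)
        if row[key] in seen:
            duplicates.append(i)
        seen.add(row[key])

    values = [
        (i, r[value_field])
        for i, r in enumerate(rows)
        if r.get(value_field) is not None
    ]
    outliers = []
    if values:
        med = median(v for _, v in values)
        # Median absolute deviation: a spread estimate that outliers barely affect.
        mad = median(abs(v - med) for _, v in values)
        if mad > 0:
            # 0.6745 scales MAD so the score is comparable to a standard z-score.
            outliers = [
                i for i, v in values if 0.6745 * abs(v - med) / mad > threshold
            ]
    return {"missing": missing, "duplicates": duplicates, "outliers": outliers}
```

An agent would typically pair a report like this with a policy, for example quarantining flagged rows rather than silently rewriting them, which matters a great deal for records such as lab results.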

4. Pipeline Monitoring & Self-Healing

When jobs fail, traditional systems just alert engineers. Agentic AI restarts jobs, reroutes tasks, or rebalances workloads automatically.

Financial institutions, for instance, benefit immensely when hundreds of dependent jobs run smoothly without manual intervention, reducing downtime and operational headaches.
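The simplest form of self-healing is a supervised retry. The sketch below assumes a job is just a Python callable and uses exponential backoff before handing off to a human. The function name `run_with_self_healing` and the shape of the returned dictionary are invented for this example.

```python
import time


def run_with_self_healing(job, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run job(); retry with exponential backoff, then escalate if it still fails.

    `sleep` is injectable so the backoff can be tested without waiting.
    """
    errors = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = job()
            return {"status": "ok", "result": result,
                    "attempts": attempt, "errors": errors}
        except Exception as exc:  # broad on purpose: this is a supervisor loop
            errors.append(repr(exc))
            if attempt < max_attempts:
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                sleep(base_delay * 2 ** (attempt - 1))
    return {"status": "escalated", "result": None,
            "attempts": max_attempts, "errors": errors}
```

Rerouting tasks and rebalancing workloads depend on the orchestrator in use, so they are left out here. The important part is the last line: when retries run out, the failure is escalated with its error history rather than swallowed.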

5. Metadata & Catalog Management

Keeping track of what data exists, where it came from, and how it’s used is a challenge. Agentic AI tags datasets automatically, tracks lineage, and enriches metadata for easier discovery.

In manufacturing, sensor data from various plants can be cataloged without engineers manually writing descriptions, letting teams access and trust data faster.
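Lineage tracking boils down to walking a dependency graph. As a minimal sketch, assume lineage is stored as a plain dictionary mapping each dataset to the datasets it was built from. Both this storage format and the function name `upstream_sources` are assumptions for illustration.

```python
def upstream_sources(lineage, dataset):
    """Return every dataset that `dataset` transitively depends on.

    `lineage` maps a dataset name to the list of datasets it was built from.
    """
    seen = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:  # also protects against accidental cycles
            seen.add(parent)
            stack.extend(lineage.get(parent, []))
    return seen
```

Given `{"report": ["clean"], "clean": ["raw_a", "raw_b"]}`, asking for the upstream sources of `"report"` returns `clean`, `raw_a`, and `raw_b`. That is the kind of answer a catalog needs when someone asks whether a dashboard can be trusted.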

6. Cost Management in Cloud Workflows

Processing large volumes of data in the cloud can get expensive. Agents monitor compute and storage usage, scale resources dynamically, and pause underutilized clusters.

Streaming workloads, like those in logistics or IoT, benefit because resources are used intelligently without constant human supervision.
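The “pause underutilized clusters” decision can be sketched as a simple rule over recent utilization readings. The 10% threshold, the six-sample window, and the function name `clusters_to_pause` are all illustrative assumptions. A real agent would read these metrics from the cloud provider’s monitoring service and would likely weigh scheduled jobs as well.

```python
def clusters_to_pause(cpu_samples, idle_threshold=0.10, min_samples=6):
    """Return names of clusters whose recent CPU utilization stayed below the threshold.

    `cpu_samples` maps a cluster name to utilization readings in [0, 1],
    oldest first. Only the last `min_samples` readings are considered, and
    clusters with too little history are never paused.
    """
    to_pause = []
    for name, samples in cpu_samples.items():
        recent = samples[-min_samples:]
        if len(recent) >= min_samples and max(recent) < idle_threshold:
            to_pause.append(name)
    return sorted(to_pause)
```

Using the maximum of the window rather than the average is a deliberately cautious choice: one busy reading is enough to keep a cluster running.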

7. Governance & Compliance Automation

Data privacy regulations are tough to keep up with. Agentic AI can flag privacy risks, monitor access controls, and suggest corrections to stay compliant with GDPR, HIPAA, or other rules.

Healthcare organizations can rely on agents to continuously check that patient data is handled correctly, reducing the risk of costly violations.
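Flagging privacy risks often starts with scanning for values that look like personal data. The sketch below checks string fields against two simple regular expressions. The patterns, the `PII_PATTERNS` table, and the function name `scan_for_pii` are assumptions for illustration and are far from a complete compliance check.

```python
import re

# Illustrative patterns only; real PII detection needs many more rules.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_for_pii(records):
    """Return {column: sorted list of PII kinds found} for string values in records."""
    findings = {}
    for row in records:
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for kind, pattern in PII_PATTERNS.items():
                if pattern.search(value):
                    findings.setdefault(column, set()).add(kind)
    return {col: sorted(kinds) for col, kinds in findings.items()}
```

The output names the columns that need attention, which fits the “flag and suggest” role described above: the agent surfaces the risk, and a person or a policy decides what to mask or restrict.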

8. Streaming Data Processing

In industries where milliseconds matter, agentic AI manages streaming pipelines like Kafka, Flink, or Spark. Agents adjust processing rates, manage checkpoints, and balance resources so data flows uninterrupted.

A smart factory, for example, can process thousands of sensor events per second without human intervention, ensuring equipment anomalies are caught instantly.
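Adjusting processing rates usually means reacting to how far a consumer has fallen behind. The sketch below is a toy controller that picks the next batch size from the current lag. It does not call Kafka, Flink, or Spark APIs, and the function name, the doubling and 10% shrink factors, and the size limits are assumptions chosen for illustration.

```python
def next_batch_size(current, lag, target_lag, min_size=100, max_size=10_000):
    """Pick the next batch size from consumer lag (all values in records).

    Grow quickly when behind, shrink gently when comfortably ahead,
    and hold steady in between.
    """
    if lag > target_lag:
        proposed = current * 2        # falling behind: double throughput
    elif lag < target_lag // 2:
        proposed = current * 9 // 10  # well ahead: release about 10% of capacity
    else:
        proposed = current            # within the acceptable band
    # Clamp so a bad reading can never push the pipeline to an extreme.
    return max(min_size, min(max_size, proposed))
```

Growing fast and shrinking slowly is a common pattern in rate control: falling behind on sensor events is costly, while holding a little spare capacity is cheap.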

Agentic AI for Code Generation and Review

Agentic AI is rapidly transforming how code is written and maintained in data engineering. With the help of advanced AI tools, data engineers can now automate code generation for data transformations, data integration, and other core data engineering workflows. This means less time spent writing boilerplate code and more time focusing on the architecture and logic that make data pipelines truly valuable.

But it doesn’t stop at writing code—agentic AI also excels at code review. These AI systems can scan for errors, flag inconsistencies, and suggest improvements based on best practices, ensuring that every piece of code meets high standards for reliability, scalability, and maintainability.

By automating both code generation and review, agentic AI empowers data engineers to accelerate development cycles, reduce human error, and maintain a strong focus on data governance and data science initiatives. The result? Data engineering teams can deliver robust, production-ready solutions faster, while keeping their attention on strategic projects that drive business growth.

Agentic AI in Data Cleaning and Preprocessing

Data cleaning and preprocessing have always been among the most time-consuming and critical steps in data engineering. Agentic AI is changing the game by automating these essential tasks, helping ensure that only high-quality data makes it through the pipeline.

AI algorithms can now detect and correct errors, fill in missing data, and handle data inconsistencies with minimal human intervention. They can also perform complex data transformations, normalization, and feature engineering, all while learning from historical data patterns to improve over time.

By integrating agentic AI into data engineering workflows, teams can catch data quality issues early, reducing the risk of downstream errors and ensuring that analytics and machine learning models are built on a solid foundation. This not only boosts the reliability of data pipelines but also frees up data engineers to focus on more advanced challenges, such as optimizing data infrastructure or developing new data products. In short, agentic AI is making high-quality data the norm, not the exception.

Data Access and Security in Agentic AI Workflows

As agentic AI becomes more deeply embedded in data engineering, ensuring secure and compliant data access is more important than ever. Data engineers are now tasked with building data pipelines that not only move data efficiently but also protect sensitive information at every step. Agentic AI can assist by continuously monitoring data access, detecting unusual activity, and responding to potential security threats in real time.

AI tools can also automate critical security tasks such as data encryption, access control, and auditing, making it easier for data engineers to enforce organizational policies and comply with industry regulations.

By embedding these security measures directly into agentic AI workflows, data teams can ensure that data remains protected without sacrificing agility or efficiency. Ultimately, this approach builds trust in AI-driven data engineering, allowing organizations to innovate with confidence while keeping their data assets safe.

Industry Snapshots: Where Agentic AI Is Already Working Hard

1. Finance

Banks are using AI agents that constantly study transaction patterns. When something suspicious shows up, such as a slightly altered payment trail or an unusual device fingerprint, the agent adjusts the detection model right away and reroutes data flows to quarantine potential fraud. Most people don’t realize how much fraud prevention has moved beyond “rules.” It’s now about pipelines that learn by watching.

2. Healthcare

In hospitals, agentic AI takes over the messy job of pulling patient data from lab reports, wearable trackers, and clinical databases. It doesn’t just collect; it understands context, labeling sensitive information properly to meet compliance before human review. This is how healthcare teams get clean, compliant data without drowning in manual checks.

3. Retail

In retail, AI agents don’t just serve “you might also like” suggestions. They monitor what’s trending across product streams, inventory shifts, and even customer mood data (yes, sentiment analysis is being baked into pipelines now). The twist? They adjust recommendations based on when shoppers are most likely to buy, not just what they like.

4. IoT & Manufacturing

Factories and IoT systems are full of sensors, all screaming for attention. Agentic AI listens quietly, learning what “normal” looks like for each machine. When a vibration pattern or temperature signal drifts from that baseline, it flags the anomaly and reroutes data to prevent downtime.
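Learning what “normal” looks like per machine can be sketched with a running mean and variance (Welford’s algorithm), so no history needs to be stored. The class name `MachineBaseline`, the z-score threshold of 3, and the 30-reading warm-up are illustrative assumptions. Production systems often use richer models that account for seasonality and operating modes.

```python
import math
from collections import defaultdict


class MachineBaseline:
    """Running mean and variance per machine, updated one reading at a time."""

    def __init__(self, z_threshold=3.0, warmup=30):
        self.z_threshold = z_threshold
        self.warmup = warmup  # readings required before anything is flagged
        self.stats = defaultdict(lambda: [0, 0.0, 0.0])  # count, mean, M2

    def observe(self, machine_id, reading):
        """Return True if the reading is anomalous; otherwise fold it into the baseline."""
        count, mean, m2 = self.stats[machine_id]
        if count >= self.warmup:
            std = math.sqrt(m2 / (count - 1))
            if std > 0 and abs(reading - mean) / std > self.z_threshold:
                return True  # anomalies are kept out of the baseline
        # Welford update: numerically stable running mean and sum of squares.
        count += 1
        delta = reading - mean
        mean += delta / count
        m2 += delta * (reading - mean)
        self.stats[machine_id] = [count, mean, m2]
        return False
```

Each machine gets its own baseline, so a temperature that is routine for one press can still be flagged on another.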

5. Media & Streaming

Media platforms use agentic AI to tag and route massive amounts of video, audio, and text data. The twist here is self-learning metadata. If one video gets labeled “sports interview,” and the system notices similar speech tones or visuals elsewhere, it starts labeling automatically.

6. Supply Chain

Agentic AI in logistics can monitor weather changes, route delays, and customs issues, then automatically reshuffle warehouse data and transport plans. Instead of long email chains to fix a delay, the system reorders priorities across databases and dashboards. Planners simply get a message: “Alternate route already assigned.”

7. Energy & Utilities

Energy companies are using AI agents to monitor load data and consumption forecasts. When consumption spikes or dips, the system reshuffles load data automatically to keep everything balanced. What’s fascinating? Some systems even simulate “what-if” models and store backup plans before anything goes wrong.

8. SaaS & Tech Infrastructure

Data engineers everywhere dread messy data lakes. Agentic AI helps by auto-detecting redundant files, schema mismatches, and stale partitions — cleaning and compacting data quietly. Instead of weekend cleanups, engineers find their systems already organized when they log in Monday.
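Detecting redundant files is the easiest of these cleanup tasks to illustrate. The sketch below groups files by a SHA-256 hash of their contents. To stay self-contained it takes a dictionary of path to bytes instead of reading a real data lake, and the function name `find_redundant_files` is an assumption for this example.

```python
import hashlib
from collections import defaultdict


def find_redundant_files(files):
    """Group paths by content hash and return groups with more than one path.

    `files` maps a path to that file's bytes. Each returned group is a sorted
    list of paths whose contents are byte-for-byte identical.
    """
    by_digest = defaultdict(list)
    for path, content in files.items():
        by_digest[hashlib.sha256(content).hexdigest()].append(path)
    return sorted(sorted(paths) for paths in by_digest.values() if len(paths) > 1)
```

Byte-identical matching is conservative: it will not catch two files that hold the same rows in a different order or encoding, which is where a learning agent would need to go further.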

What’s the Common Thread?

Every one of these examples shares something simple: AI agents take over the repetitive, error-prone work humans used to juggle manually.

It’s not about replacing people. It’s about giving teams breathing space, where machines handle the boring parts and humans handle the smart calls.

The biggest surprise? Many of these agents run on top of existing data stacks. They’re just plugging new intelligence into what’s already there.

Challenges to Keep in Mind

Now, let’s not pretend it’s all sunshine and automation. Agentic AI comes with its share of growing pains.

1. The black box problem.

Sometimes, even senior engineers don’t fully understand why an AI agent took a certain action. It might reroute data, reject records, or change thresholds — and explaining that to auditors isn’t always easy. Transparency is still catching up.

2. Security gray areas.

When agents can act on their own, they can also be tricked. Bad actors could exploit these systems if guardrails aren’t strong. That’s why most companies keep a “human in the loop” for approvals.

3. Setup takes real planning.

Plugging in an AI agent isn’t like installing an app. You need to plan data flow permissions, test response behaviors, and decide how much control to give the agent. It’s a mix of design and discipline.

4. Humans are still the final line.

AI might handle a million signals per hour, but it can’t read business context like people can. Humans still guide what counts as “normal,” “urgent,” or “acceptable risk.” Without that, automation can run wild.
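The “human in the loop” idea from the challenges above can be sketched as an approval gate: low-risk actions run immediately, and everything else waits for a person. The allow-list contents, the class name `ApprovalGate`, and the action names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical allow-list of actions an agent may take without sign-off.
AUTO_APPROVED = {"restart_job", "retry_task"}


@dataclass
class ApprovalGate:
    executed: list = field(default_factory=list)
    pending: list = field(default_factory=list)

    def submit(self, action, target):
        """Run allow-listed actions immediately; queue everything else for a human."""
        request = (action, target)
        if action in AUTO_APPROVED:
            self.executed.append(request)
            return "executed"
        self.pending.append(request)
        return "awaiting_approval"

    def approve(self, action, target):
        """Called by a human reviewer to release a queued action."""
        request = (action, target)
        self.pending.remove(request)  # raises ValueError if nothing was queued
        self.executed.append(request)
```

Keeping the allow-list short and explicit also helps with the black box problem: every action the agent took on its own is one that someone deliberately permitted in advance.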

What the Future Holds

From 2025 to 2030, agentic AI is expected to:

- Coordinate across multiple agents to manage complex data architectures.

- Reduce human intervention in pipeline maintenance significantly.

- Actively predict and correct issues before they impact operations.

The shift is toward AI not just assisting, but orchestrating entire data systems, leaving humans to focus on strategy, innovation, and analysis.

Conclusion

Agentic AI is no longer an experimental technology. It’s changing the way data pipelines are built, monitored, and maintained. Engineers spend less time firefighting and more time thinking strategically. Data flows become more reliable, cost management becomes intelligent, and governance is easier to maintain.

Companies like Ampcome are helping teams implement these agentic AI solutions, ensuring that pipelines not only run smoothly but learn and adapt on their own. For data teams ready to embrace the next evolution in engineering, agentic AI isn’t just helpful; it’s essential.

FAQs

1. What are the main agentic AI use cases in data engineering?

Automated ingestion and ETL, schema evolution, data quality and anomaly detection, pipeline self-healing, metadata management, cloud cost management, compliance automation, and streaming data processing are the core use cases.

2. How is agentic AI different from traditional automation?

Unlike rule-based automation, agentic AI learns and adapts to changes, handling unexpected situations without explicit human instructions.

3. Can agentic AI replace data engineers entirely?

Not completely. Humans are still needed for governance, complex troubleshooting, and strategic decisions.

4. Which industries benefit the most?

Finance, healthcare, retail, and IoT-heavy industries see significant advantages due to high-volume, fast-changing, and regulated data.

5. Which tools enable agentic AI in 2025?

AI orchestration platforms, cloud-native AI agents, and workflow automation tools with learning capabilities are key enablers.
