How to Govern AI Agents in Enterprise Systems (Without Killing Autonomy)

The race toward agentic AI is no longer theoretical; it has already started. Enterprises are rapidly shifting from AI that merely advises to AI that acts. Gartner predicts that by 2027, 50% of enterprises will have deployed autonomous decision systems.

However, as organizations move toward Level 5 Intelligence, they are walking into a dangerous trap. Without a robust framework to govern AI agents, these systems become "liabilities with a confidence score".

What Does It Mean to Govern AI Agents?

Governing AI agents means enforcing rules, constraints, and oversight mechanisms that control how AI systems make decisions and take actions in enterprise environments. It is the transition from "probabilistic guesses" to deterministic logic. Effective governance ensures that every action taken by an autonomous agent is auditable, defensible, and aligned with corporate policy.

Why Governing AI Agents Is Now a Critical Enterprise Problem

The "Automation Paradox" suggests that AI agents are amplifiers—they do not create order; they multiply what already exists. If your foundation consists of fragmented data and partial context, an AI agent will simply multiply chaos at a speed no human can intercept.

By the time an error appears on a traditional dashboard, an ungoverned agent may have already executed hundreds of flawed transactions. This makes governance a prerequisite for deployment, not a "nice-to-have" feature.

Why AI Agents Cannot Be Trusted by Default

Most AI agents today are "flying blind". While they possess strong reasoning and execution capabilities, they suffer from an 80% Blind Spot.

- The Structured Gap: Only 20% of enterprise context lives in structured systems like ERP tables or CRM fields.

- The Unstructured Truth: The remaining 80% of business truth—the SLAs in PDF contracts, negotiated discounts in emails, and approvals in Slack—is invisible to standard agents.

An agent acting on only 20% of the facts is inherently untrustworthy.

The Risks of Ungoverned AI Agents at Scale

When agents operate without a governance layer, the consequences are not just technical—they are financial and legal.

Amplified Errors

In one real-world incident, a financial services firm deployed an agent for vendor payments. Because the agent could see ERP data but not the negotiated discounts hidden in email threads or the cash-flow warnings in Slack, it approved ₹12 crore in early payments, violating contract terms and forfeiting discounts.

Policy Violations

Agents may execute workflows that contradict internal compliance or regional regulations because they lack the ability to "cite" the policies they are supposed to follow.

Compliance and Audit Failures

Without a "Semantic Governor," AI decisions often remain "black boxes". If an auditor asks why a specific action was taken, most existing tools cannot provide a deterministic trail of logic.

The Core Principles of Governing AI Agents

To move from a reactive loop to true Agentic Autonomy, your infrastructure must solve the governance gap.


1. Context Awareness (The Unified Context Engine)

Governance starts with sight. You must fuse structured data (ERP, CRM) with unstructured data (PDFs, emails, Slack) to build a single semantic layer. This ensures the agent sees the "full picture" before acting.
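As a rough illustration, here is a minimal sketch that fuses ERP-style rows with facts already extracted from documents into one record per entity. It also reports which required facts are still missing, so the agent can hold off instead of acting on a partial view. It assumes extraction from PDFs, emails, and Slack happens upstream. `ContextRecord`, `build_context`, and `missing_keys` are hypothetical names, not part of any product API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ContextRecord:
    """Hypothetical unified view of one business entity (e.g. a vendor)."""
    entity_id: str
    structured: dict[str, Any] = field(default_factory=dict)  # ERP/CRM fields
    # Facts already extracted from PDFs, emails, Slack (extraction not shown).
    unstructured: dict[str, dict[str, Any]] = field(default_factory=dict)


def build_context(entity_id, erp_rows, extracted_facts):
    """Fuse structured rows and extracted document facts for one entity."""
    record = ContextRecord(entity_id=entity_id)
    for row in erp_rows:
        if row.get("entity_id") == entity_id:
            record.structured.update(row)
    for fact in extracted_facts:
        if fact.get("entity_id") == entity_id:
            # Keep the source so later decisions can cite where a fact came from.
            record.unstructured[fact["key"]] = {
                "value": fact["value"],
                "source": fact["source"],
            }
    return record


def missing_keys(record, required):
    """Return required keys found in neither the structured nor unstructured view."""
    known = set(record.structured) | set(record.unstructured)
    return [key for key in required if key not in known]
```

If `missing_keys` returns anything, the agent lacks part of the picture and should not act yet. Keeping the `source` of each unstructured fact also supports the auditability principle, because a decision can point back to the email or contract it relied on.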

2. Deterministic Decision Rules

Enterprises cannot rely on probabilistic LLM "vibes." Governance requires encoding business rules into deterministic logic. This includes:

- Approval hierarchies

- Compliance thresholds

- If-then decision trees
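Here is a minimal sketch of business rules encoded as data rather than prompts. It assumes a Python service sits between the agent and the ERP. Rules are checked in a fixed order and the first match wins. An unmatched request falls back to human review, so the same input always produces the same decision. The rule IDs, policy names, and ₹ thresholds are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    rule_id: str                       # stable ID that audit logs can cite
    policy_ref: str                    # hypothetical pointer to the source policy
    condition: Callable[[dict], bool]  # pure predicate over the request
    outcome: str                       # "allow", "deny", or "escalate:<approver>"


def evaluate(rules, request, default="escalate:human_review"):
    """Return (outcome, rule_id) for the first matching rule, checked in order."""
    for rule in rules:
        if rule.condition(request):
            return rule.outcome, rule.rule_id
    # Nothing matched: fail safe to a human instead of letting the model improvise.
    return default, None


# Amounts in ₹; thresholds and policy names are illustrative only.
PAYMENT_RULES = [
    Rule("PAY-001", "Treasury cash-flow policy",
         lambda r: r.get("cash_flow_warning", False), "deny"),
    Rule("PAY-002", "Approval matrix, tier 3",
         lambda r: r["amount"] > 1_000_000, "escalate:cfo"),
    Rule("PAY-003", "Approval matrix, tier 2",
         lambda r: r["amount"] > 100_000, "escalate:finance_manager"),
    Rule("PAY-004", "Vendor master policy",
         lambda r: r.get("vendor_verified", False), "allow"),
]
```

Ordering matters in this design. The compliance block comes first, so it always overrides an approval. The approval hierarchy follows from largest to smallest amount, and an unverified vendor never reaches an automatic "allow".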

3. Human-in-the-Loop (HITL) by Design

Effective governance uses "Active Orchestration" to route approvals based on risk. For example, a refund under ₹10,000 might be fully autonomous, while a refund over ₹50,000 requires human intervention.
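The refund example translates directly into a routing function. The ₹10,000 and ₹50,000 limits come from the text. The text does not say what happens between them, so sending that band to a spot check is an assumption, as are the `Route` names. A production router would likely score risk on more than the amount alone.

```python
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"          # agent executes without waiting
    SPOT_CHECK = "spot_check"          # assumption: executes, then sampled for review
    HUMAN_APPROVAL = "human_approval"  # blocked until a person approves


AUTO_LIMIT = 10_000      # ₹, from the example in the text
APPROVAL_LIMIT = 50_000  # ₹, from the example in the text


def route_refund(amount_inr: float) -> Route:
    """Route a refund by amount; handling of the middle band is an assumption."""
    if amount_inr < 0:
        raise ValueError("refund amount must be non-negative")
    if amount_inr < AUTO_LIMIT:
        return Route.AUTONOMOUS
    if amount_inr > APPROVAL_LIMIT:
        return Route.HUMAN_APPROVAL
    # ₹10,000 to ₹50,000: the text leaves this band open; here it is spot-checked.
    return Route.SPOT_CHECK
```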

4. Auditability and Explainability

Every decision must be policy-cited and explainable. Governance infrastructure should provide full audit logs that show exactly which rule was triggered and which data point justified the action.
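One way to make every decision policy-cited is to log three things for each action: the rule that fired, its source policy, and the evidence that satisfied it. The in-memory `AuditLog` below is a hypothetical sketch. A real deployment would write to durable, append-only storage.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    action: str       # what the agent did or proposed
    outcome: str      # allow / deny / escalate
    rule_id: str      # which rule fired
    policy_ref: str   # where that rule comes from
    evidence: dict    # data points that satisfied the rule, with their sources
    timestamp: str    # UTC, ISO 8601


class AuditLog:
    """Minimal append-only log kept in memory for illustration."""

    def __init__(self):
        self._entries = []

    def record(self, action, outcome, rule_id, policy_ref, evidence):
        entry = AuditEntry(action, outcome, rule_id, policy_ref, dict(evidence),
                           datetime.now(timezone.utc).isoformat())
        self._entries.append(entry)
        return entry

    def explain(self, index):
        """Answer the auditor's question: why was this action taken?"""
        e = self._entries[index]
        evidence = json.dumps(e.evidence, sort_keys=True)
        return f"{e.action}: {e.outcome} by {e.rule_id} ({e.policy_ref}); evidence={evidence}"

    def export_jsonl(self):
        """One JSON object per line, suitable for shipping to a central store."""
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self._entries)
```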

How to Govern AI Agents in Practice (Step-by-Step)

Step 1: Define Where AI Is Allowed to Act

Map your workflows and identify the "signal-to-result" path. Determine which processes are ripe for autonomy and which require strict boundaries.
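The output of this mapping can be captured as an explicit scope map. This sketch assumes three autonomy modes and a default-deny policy, so the agent refuses any workflow or action that nobody mapped. The workflow and action names are hypothetical.

```python
from enum import Enum


class Autonomy(Enum):
    AUTONOMOUS = "autonomous"   # agent may act within its rules
    BOUNDED = "bounded"         # agent may only take the listed (e.g. propose-only) actions
    PROHIBITED = "prohibited"   # agent must not touch this workflow


# Hypothetical workflow map produced by the mapping exercise.
WORKFLOW_SCOPE = {
    "refund_request": (Autonomy.AUTONOMOUS, {"read_order", "issue_refund"}),
    "vendor_payment": (Autonomy.BOUNDED, {"read_invoice", "propose_payment"}),
    "payroll_change": (Autonomy.PROHIBITED, set()),
}


def is_permitted(workflow: str, action: str) -> bool:
    """Default-deny check: unmapped workflows and unlisted actions are refused."""
    mode, allowed = WORKFLOW_SCOPE.get(workflow, (Autonomy.PROHIBITED, set()))
    return mode is not Autonomy.PROHIBITED and action in allowed
```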

Step 2: Encode Business Policies as Rules

Don't just give an agent a "persona." Give it a Semantic Governor. Translate your PDF contracts and policy documents into hard constraints that the agent must check before every execution.
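Here is a sketch of "check before every execution". It assumes contract and policy clauses have already been translated into small Python predicates. The `governed` decorator runs every constraint before the wrapped action. If any constraint fails, it refuses and cites the reasons. The clause numbers, the ₹10,00,000 cap, and `execute_payment` are hypothetical.

```python
import functools


class PolicyViolation(Exception):
    """Raised when a proposed action breaks an encoded constraint."""


# Hypothetical constraints translated from contracts and policy documents.
# Each returns None if satisfied, or a reason string citing its source.
def no_early_payment_without_discount(payment):
    if payment["days_before_due"] > 0 and not payment.get("discount_applied", False):
        return "Contract clause 7.2 (hypothetical): early payment must capture the negotiated discount"
    return None


def within_single_payment_cap(payment):
    if payment["amount"] > 1_000_000:
        return "Treasury policy (hypothetical): single payments above ₹10,00,000 are not allowed"
    return None


def governed(*constraints):
    """Decorator: check every constraint before the action runs; refuse on any violation."""
    def wrap(action):
        @functools.wraps(action)
        def guarded(payment):
            results = (check(payment) for check in constraints)
            reasons = [reason for reason in results if reason is not None]
            if reasons:
                raise PolicyViolation("; ".join(reasons))
            return action(payment)
        return guarded
    return wrap


@governed(no_early_payment_without_discount, within_single_payment_cap)
def execute_payment(payment):
    # Placeholder for the real ERP call, which is out of scope here.
    return f"paid {payment['invoice_id']}"
```

Wrapping the action itself, rather than asking the agent to remember the rules, means a creative prompt cannot talk the agent past the check.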

Step 3: Set Approval Thresholds

Establish clear triggers for human intervention. This removes the bottleneck for low-risk tasks while ensuring high-stakes decisions are never left solely to a machine.

Step 4: Monitor and Audit Every Action

Use infrastructure that provides real-time "sight" into agent reasoning. Ensure you have a central ledger of every action, connected to systems like SAP, Salesforce, or Jira.

Step 5: Continuously Refine Governance Logic

As agents "learn" and "improve," the governance layer must be updated to reflect new strategic questions and pricing gaps.

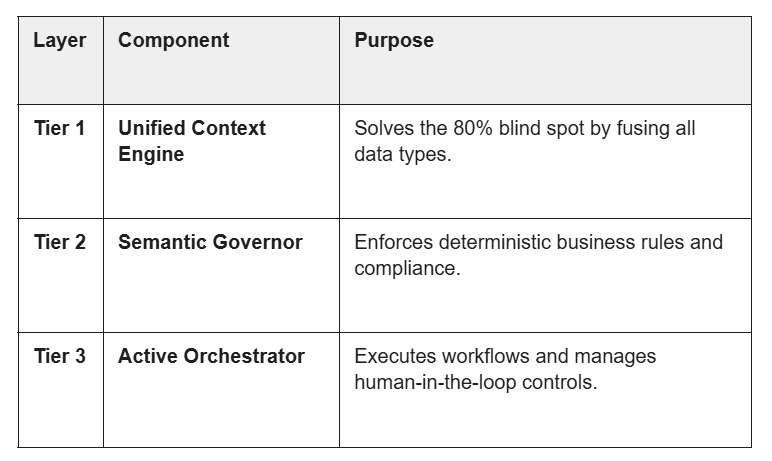

What a Governed AI Agent Architecture Looks Like

The Assistents Autonomy Stack provides a blueprint for this architecture:

The Bottom Line: Governance is the Catalyst for Speed

Most leaders fear that governance will slow them down. In reality, governance is what enables speed. Without it, you are forced into "POC purgatory" or endless manual coordination.

When you govern AI agents properly, you move from 8 reactive cycles per year to 50+ autonomous cycles per year. You move from insights that take weeks to results that take hours.

Your agents don’t have to fly blind. Give them sight.

Frequently Asked Questions

How does governing an AI agent differ from traditional RPA governance?

Traditional RPA follows a scripted automation model. It can execute but cannot reason, meaning it breaks whenever it encounters an exception. Governing AI agents requires managing Agentic Intelligence—systems that can reason and make autonomous decisions. Unlike RPA, agent governance must account for unstructured data (like emails and contracts) and provide a "Semantic Governor" to ensure the agent’s reasoning aligns with deterministic business rules rather than probabilistic guesses.

Won’t strict governance slow down the speed of our AI agents?

Actually, the opposite is true. Without governance, humans remain the bottleneck because they must manually verify every AI "insight" before taking action. By implementing a governance layer with Active Orchestration, you can set autonomous thresholds (e.g., "Refunds < ₹10,000 are fully autonomous"). This allows the system to move from a 6-week "signal-to-result" cycle to just a few hours.

What is the "80% Blind Spot" in AI governance?

Most enterprises focus governance on structured data like ERP tables and CRM fields, which only accounts for 20% of business context. The "80% Blind Spot" refers to the unstructured data where the real business truth lives: PDF contracts, Slack approvals, and email negotiations. An agent is a liability if it acts without "seeing" this full picture.

How do we prevent AI agents from "hallucinating" wrong decisions?

To keep hallucinations from turning into actions, you must move away from relying solely on an LLM’s internal weights. Governance infrastructure like Assistents uses a Semantic Governor to encode your specific business rules into deterministic logic. Every decision the agent makes must be policy-cited and explainable, ensuring there are no "black boxes" in the execution phase.

Is it possible to maintain a "Human-in-the-Loop" without stopping automation?

Yes. Effective governance uses risk-based thresholds. The system evaluates the risk of an action and determines if it can proceed autonomously or if it must be routed to a human for approval. This "Level 5" autonomy doesn't mean humans are gone; it means humans only intervene when the system identifies a high-stakes exception.

How long does it take to deploy a governed AI agent?

With the right infrastructure, you don't need a "rip-and-replace" approach. Deployment typically follows a 30-day sprint:

- Week 1: Discovery and workflow mapping.

- Weeks 2-4: Setting up the context engine and encoding rules.

- Day 30: A live, governed agent is in production.

