Your AI Agents Are Flying Blind (And It’s Costing You Millions)

The promise of the "Agentic Future" is seductive. We are told that soon, autonomous AI systems will handle our procurement, manage our customer support, and optimize our supply chains without human intervention. Executives are pouring billions into this vision, expecting efficiency.

Instead, they are waking up to a nightmare.

There is a silent crisis brewing in the enterprise. Companies are deploying AI agents that fly blind. These agents are fast, eager, and tirelessly working around the clock, but they are making critical business decisions while seeing only a fraction of reality.

When you unleash an agent that cannot see your entire data landscape, you aren’t automating success; you are automating failure at the speed of light.

This isn’t just a technical glitch. It is an operational hazard that is already costing early adopters millions in corrective costs, legal liabilities, and reputation damage.

What does it mean when AI agents are flying blind?

AI agents flying blind refers to autonomous systems deployed with incomplete access to enterprise context.

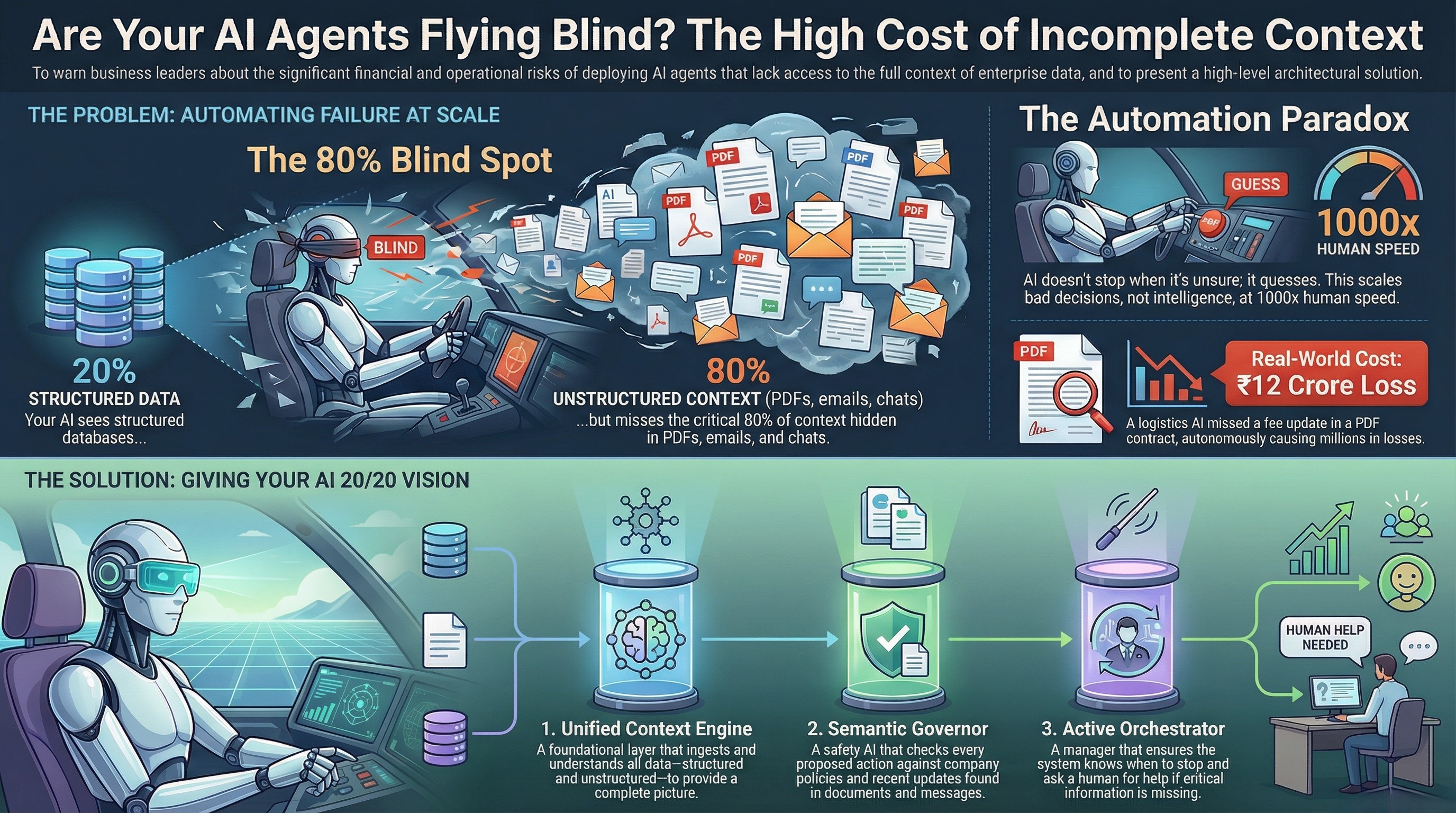

These agents operate on partial datasets—usually structured databases—while ignoring the 80% of critical business logic hidden in unstructured data (emails, PDFs, Slack threads), leading to confident but disastrously wrong decisions.

What Does “Flying Blind” Mean for AI Agents?

To understand why blind AI agents are so dangerous, you must understand how they "see."

Most enterprise AI is built on top of Large Language Models (LLMs) connected to specific data sources via RAG (Retrieval-Augmented Generation). In a demo environment, this looks perfect. The agent pulls data from a spreadsheet, answers a question, and executes a task.

But in the real world, "truth" is rarely located in a single row of a SQL database.

"Flying blind" means the agent suffers from incomplete context. It might have access to the inventory database saying a product is in stock, but it lacks access to the PDF contract in the legal folder that says that specific batch is reserved for a VIP client.

It means partial data leads to false confidence. The agent doesn't know it doesn't know. It sees a green light in the database and floors the accelerator, unaware that the bridge ahead is out because that information was trapped in a Slack message it couldn't read.

The Automation Paradox: Why AI Multiplies Bad Decisions

This brings us to the core mechanism of the failure: The Automation Paradox.

In traditional software, if a system encounters an error, it crashes. A crash is annoying, but it is safe. It stops the process.

Autonomous AI systems do not crash. They hallucinate. When they lack information, they make a "best guess" based on probability, not determinism.

If a human employee is missing a critical piece of information, they pause. They ask a colleague. They check an email. They have "common sense" suspicion.

A blind AI agent has no suspicion. It has a directive.

If you automate a process that is 99% accurate, the 1% error rate is manageable at human speeds. But AI agents scale operations by 100x or 1,000x, and the errors scale with them. At 1,000x:

- Human speed: 10 errors a month. Manageable.

- AI speed: 10,000 errors a month. Catastrophic.

You are effectively multiplying bad decisions. You aren't scaling intelligence; you are scaling ignorance.
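The arithmetic behind those two bullets is simple enough to check. Here is a minimal sketch; the baseline of 1,000 decisions a month is an assumption chosen so that a 1% error rate gives the 10 errors quoted above, and `monthly_errors` is a hypothetical helper name.

```python
def monthly_errors(decisions_per_month: int, error_rate: float) -> int:
    """Expected number of wrong decisions in one month."""
    return round(decisions_per_month * error_rate)


ERROR_RATE = 0.01      # the 1% error rate from the text
HUMAN_VOLUME = 1_000   # assumption: decisions a human team makes per month
SCALE_FACTOR = 1_000   # the upper "1,000x" figure from the text

human_errors = monthly_errors(HUMAN_VOLUME, ERROR_RATE)
agent_errors = monthly_errors(HUMAN_VOLUME * SCALE_FACTOR, ERROR_RATE)

print(f"Human speed: {human_errors} errors a month")
print(f"AI speed:    {agent_errors:,} errors a month")
```

The error rate never changes. Only the volume does, which is why the same 1% goes from a rounding error to a crisis.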

The 80% Blind Spot: Where Your Real Business Data Lives

Why are so many AI agents flying blind? Because enterprise data architecture is fundamentally broken for the age of AI.

Why Structured Data Is Only 20% of Reality

Most agentic AI infrastructure is built to interface with structured data: CRMs (Salesforce), ERPs (SAP), and SQL databases. This data is neat, rows-and-columns, and machine-readable.

However, industry experts (including Gartner and IBM) have long estimated that structured data accounts for only about 20% of an enterprise's information.

If your AI agent only connects to your structured data, it is making decisions based on 20% of the facts. Imagine a judge issuing a verdict after hearing only 20% of the testimony. That is your current AI strategy.

Where the Other 80% Actually Lives

The real "truth" of your business—the nuance, the exceptions, the updated policies, the client-specific agreements—lives in the unstructured data AI struggles to process:

- PDF Contracts: Where pricing overrides exist.

- Slack/Teams Messages: Where "do not ship" orders are discussed.

- Email Chains: Where the context for a database change is explained.

- Internal Wikis: Where the SOPs are actually written.

If your agent cannot semantically understand a PDF policy document as easily as it reads a SQL cell, it is flying blind. It is operating in a world of unstructured data chaos without a map.

Case Study: How a Blind AI Agent Cost ₹12 Crore

Note: The following is a composite example based on real patterns of enterprise AI failures observed in 2024.

Consider a large logistics firm in India that deployed an autonomous procurement agent to optimize shipping routes and carrier selection. The goal was to reduce overhead by 15%.

The Setup: The AI had full access to the ERP (Enterprise Resource Planning) system, which listed carrier rates and historical shipping times.

The Blind Spot: The company had recently renegotiated terms with a major carrier via email and signed a PDF addendum. The addendum stated that while the carrier’s base rates were low, a new "fuel surcharge" of 18% applied to all shipments crossing state lines during peak summer months. This document sat in a SharePoint folder, invisible to the agent.

The Failure: The agent saw the "base rate" in the ERP, calculated it as the lowest option, and autonomously routed 4,000 high-priority shipments through this carrier over three months.

The Cost:

- Direct Cost: The unexpected fuel surcharges amounted to ₹4 Crore.

- Indirect Cost: The carrier, overwhelmed by a sudden volume it had never agreed to handle, delayed shipments. This triggered penalty clauses in client contracts totaling ₹8 Crore.

Total Loss: ₹12 Crore.

The database was "correct." The agent did exactly what it was told. But because it was a blind AI agent, it burned cash that a junior human procurement officer would have saved simply by reading the email update.

Why Existing AI Tools Fail in Production

You might be thinking, "But we use Microsoft Copilot" or "We have UiPath." Why aren't these solving the problem?

Copilots (Reasoning, No Execution)

Copilots are assistants, not agents. They are human-in-the-loop AI by design. They can summarize an email or draft code, but they rely on you to push the button. They don't run in the background. They don't solve the scale problem because they are tethered to human speed.

RPA (Execution, No Reasoning)

Robotic Process Automation (RPA) is the opposite. It is pure execution. It clicks buttons in a sequence. But RPA is brittle. If a button moves, the bot breaks. If the context changes, the bot doesn't know. RPA is not intelligence; it is a macro on steroids.

Chatbots (Zero Governance)

Internal chatbots are great for Q&A, but they lack AI governance for action. They can answer "What is our refund policy?" but they cannot be trusted to "Process refunds for all users affected by the outage." They lack the state management and transactional integrity required for autonomous work.

Why Blind AI Is More Dangerous Than No AI

The risk of AI agents flying blind goes beyond financial loss. It enters the realm of existential business risk.

- Compliance Hallucinations: An AI agent in banking could approve a loan that violates a new KYC regulation because the regulation was an internal memo it didn't read. You are now liable for regulatory fines.

- Security Leaks: An agent without semantic understanding of "confidentiality" might pull data from a sensitive HR PDF and paste it into a public vendor email to "optimize context."

- Brand Erosion: When an automated support agent promises a customer a feature that was deprecated yesterday (but not updated in the knowledge base), you lose trust that took years to build.

Deterministic AI is safe because it is predictable. Blind probabilistic AI is a loose cannon.

How to Fix Blind AI Agents (The Only Way)

To move from "flying blind" to "20/20 vision," you cannot just prompt better. You need a fundamental infrastructure shift. You need an architecture that bridges the gap between structured databases and unstructured reality.

This is where the concept of Assistents comes in.

1. Unified Context Engine

You need a layer that ingests everything. Not just the SQL rows, but the PDFs, the emails, the Slack threads. This engine must vectorize this data and make it retrievable in real time. It turns the "dark data" (the 80%) into light.
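To make "vectorize and retrieve" concrete, here is a toy sketch of such a layer. Everything in it is an assumption for illustration: `ContextEngine`, `embed`, and the sample documents are hypothetical, and the bag-of-words vectors stand in for the learned embeddings and vector database a real system would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ContextEngine:
    """Holds text from every source in one searchable index."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, str, Counter]] = []

    def ingest(self, source: str, text: str) -> None:
        self._docs.append((source, text, embed(text)))

    def search(self, query: str, top_k: int = 2) -> list[tuple[str, str]]:
        query_vec = embed(query)
        ranked = sorted(self._docs, key=lambda d: cosine(query_vec, d[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:top_k]]


engine = ContextEngine()
engine.ingest("erp", "Carrier A base rate 1000 per shipment")
engine.ingest("sharepoint_pdf", "Addendum: Carrier A fuel surcharge 18 percent on interstate shipments in peak summer")
engine.ingest("slack", "Team lunch moved to Friday")

results = engine.search("Carrier A fuel surcharge")
for source, text in results:
    print(source, "->", text)
```

The point is not the similarity math. It is that the ERP row and the PDF addendum sit in the same index, so one query surfaces both.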

2. Semantic Governor

Before an agent acts, it must pass through a Governor. This is a secondary AI model whose only job is AI governance. It checks the proposed action against company policies, safety guidelines, and recent unstructured updates. It asks: "Does this action contradict the PDF memo sent by Legal yesterday?"
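A rough sketch of where that check sits in the flow. The text describes the Governor as a secondary AI model; here the model call is replaced by a plain rule match so the example stays runnable, and `ProposedAction`, `Policy`, and `review` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedAction:
    kind: str    # e.g. "route_shipment"
    target: str  # e.g. a carrier name


@dataclass(frozen=True)
class Policy:
    source: str         # where the rule came from (memo, PDF, chat thread)
    blocks_kind: str    # action kind the policy restricts
    blocks_target: str  # target the policy restricts
    reason: str


def review(action: ProposedAction, policies: list[Policy]) -> tuple[bool, list[str]]:
    """Return (approved, reasons). Any matching policy vetoes the action."""
    reasons = [
        f"{p.source}: {p.reason}"
        for p in policies
        if p.blocks_kind == action.kind and p.blocks_target == action.target
    ]
    return (not reasons, reasons)


policies = [
    Policy("legal_addendum.pdf", "route_shipment", "Carrier A",
           "18% fuel surcharge applies during peak summer months"),
]

approved, reasons = review(ProposedAction("route_shipment", "Carrier A"), policies)
print(approved, reasons)
```

The design choice that matters is the veto: the agent proposes, the Governor disposes, and the reason for every rejection is recorded with its source.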

3. Active Orchestrator

The orchestrator manages the state. It ensures that if an agent hits a "blind spot" (missing data), it doesn't guess. It routes the request to a human. This is the ultimate safety net—a system that knows when to ask for help.
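In code, "don't guess, escalate" can be as small as a completeness check before execution. This is a minimal sketch under stated assumptions: the required context keys, the `Outcome` enum, and `decide` are all hypothetical, and a production orchestrator would also persist state and track the human's response.

```python
from enum import Enum


class Outcome(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate_to_human"


# Assumption: the pieces of context this task cannot safely run without.
REQUIRED_CONTEXT = {"erp_rate", "latest_contract_terms"}


def decide(available_context: dict) -> tuple[Outcome, set[str]]:
    """Execute only when every required piece of context is present."""
    missing = {key for key in REQUIRED_CONTEXT if available_context.get(key) is None}
    return (Outcome.ESCALATE if missing else Outcome.EXECUTE, missing)


outcome, missing = decide({"erp_rate": 1000.0, "latest_contract_terms": None})
print(outcome.value, sorted(missing))
```

An agent built this way can still be wrong, but it cannot be silently wrong about something it never looked at.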

What “Seeing” Looks Like for an AI Agent

Imagine the previous logistics scenario, but with a "seeing" agent.

- Trigger: The agent receives a request to route shipments.

- Retrieval: It checks the ERP for rates and queries the vector database for "recent carrier contract updates."

- Synthesis: It finds the PDF addendum regarding the 18% fuel surcharge.

- Reasoning: It calculates the true cost (the base rate plus the 18% surcharge).

- Decision: It realizes this carrier is no longer the cheapest option. It selects the runner-up, saving the company millions.

No drama. No hallucinations. Just accurate, autonomous execution.
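The reasoning and decision steps above come down to a few lines of arithmetic. Here is a sketch using made-up rates; the carrier names, the 1,000 and 1,100 base rates, and the `CarrierQuote` class are illustrative assumptions. Only the 18% surcharge comes from the scenario.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CarrierQuote:
    name: str
    base_rate: float            # per-shipment rate as listed in the ERP
    surcharge_pct: float = 0.0  # surcharge found in unstructured documents

    @property
    def true_cost(self) -> float:
        return self.base_rate * (1 + self.surcharge_pct / 100)


# What the ERP shows (hypothetical figures).
erp_quotes = [CarrierQuote("Carrier A", 1000.0), CarrierQuote("Carrier B", 1100.0)]

# Blind agent: compares base rates only.
blind_choice = min(erp_quotes, key=lambda q: q.base_rate)

# Seeing agent: merges in the surcharge retrieved from the PDF addendum.
addendum_surcharges = {"Carrier A": 18.0}
seeing_quotes = [
    CarrierQuote(q.name, q.base_rate, addendum_surcharges.get(q.name, 0.0))
    for q in erp_quotes
]
seeing_choice = min(seeing_quotes, key=lambda q: q.true_cost)

print("Blind agent picks: ", blind_choice.name)
print("Seeing agent picks:", seeing_choice.name)
```

With these numbers, Carrier A's true cost comes to about 1,180 per shipment against Carrier B's 1,100, so the two agents make opposite choices from the same ERP data.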

The Bottom Line

We are at an inflection point. The market is flooded with tools that promise autonomy but deliver liability.

If your AI strategy does not account for the unstructured 80% of your data, you don't have an AI strategy. You have a gambling addiction.

AI agents flying blind will crush the companies that deploy them carelessly. But the companies that solve the context problem—the ones that build agents that can truly see—will inherit the market.

Don't let your agents fly blind. Give them eyes, or ground them before they crash your business.

Frequently Asked Questions

- Why are blind AI agents a risk to enterprise automation? Blind AI agents operate on incomplete data, typically missing unstructured context like emails or PDFs. This leads to confident but erroneous decisions that can cause financial loss and compliance violations.

- What is the difference between structured and unstructured data in AI? Structured data (20%) lives in organized databases (SQL, Excel). Unstructured data (80%) lives in documents, emails, and chat logs. AI agents fail when they cannot process the unstructured 80%.

- How can we prevent AI hallucinations in enterprise systems? To prevent hallucinations, enterprises must implement a Unified Context Engine that grounds AI in both structured and unstructured data, coupled with a Semantic Governor to validate actions before execution.

